10 cognitive biases to watch for in User Research (and how to avoid them)

Cognitive biases have become quite popular in mainstream culture over the last decade, thanks to books like Thinking, Fast and Slow and Predictably Irrational. Along with human-centered approaches, they have also gained a lot of prominence in Experience/Business design.

Since we have come to rely more and more on quantitative and qualitative research to make informed product and business decisions, it’s also important to ensure that the data and the methods used to collect it are not compromised by ignorance of cognitive biases, so that the research provides meaningful value to end customers.

There are more than 100 of them (188 according to this exhaustive infographic), but in the context of User Research, I’m going to focus on 10 and give examples and anecdotes on why and how to avoid them.

1. Framing effect

This is the bias I have seen occur most often in User Research, and it is also tricky to avoid if you don’t pay close attention to your words and actions.

What is it?

Simply put, human beings don’t make choices in isolation; our choices depend heavily on how the options are presented to us. A simple example: a big meal on a small plate feels more filling than a small meal on a big plate. The empathy map is designed to help overcome this bias by capturing what users see and hear along with what they say and do.

Example

When you are presenting prototypes or asking users about their experiences while using a product, be careful about how you frame the question.

A question such as “What did you like/dislike while using this product?” can cause users to focus only on the positives/negatives of the product (even for the rest of the interview) and might lead to false positive/negative insights.

A neutral, non-leading question, such as “Can you describe the last time you used it?” or “How do you feel when you use this product?”, can yield less biased results.

2. Confirmation Bias

This is the super-villain of biases: extremely common and difficult to rectify. It is one of the biggest goof-ups researchers can make, and proponents of quantitative research often cite it to justify working with large data sets.

What is it?

We favor data that confirms our existing hypotheses and beliefs, and discard data that challenges them. This is often explained as an evolutionary safety net programmed into us to protect our brains from the threat of opposing information that challenges our identity (we evolved as tribes with shared beliefs). That makes it very difficult to get rid of completely.

Example

A typical example is a researcher or product manager asking the participant: “Have you ever done/considered doing Action X through App Y?” The participant says, “Yes! All the time!” Hooray! You have hit the jackpot and your hypothesis is confirmed: users love taking that action through your app. But in reality, that might be far from the truth.

When you get an affirmative or positive response to something, check and recheck it in several different ways. Why did the user take that action? Was it because they had no other options? Did they like the process? How many times in the last week did they do it? Can they show any evidence? Could they just want to please you?

The best way to avoid confirmation bias is to consistently play devil’s advocate against your own thoughts and hypotheses throughout the research process.

3. Hindsight Bias

Human beings are really bad at thinking about time, and hindsight bias is a testament to it.

What is it?

To impose order and coherence on the ebb and flow of time, we look for reasons behind the events in our past without having any factual evidence for them, and we come to see those events as having been more predictable than they really were. That is why it is often called the “I knew it all along” phenomenon.

Example

When we conduct research, we often ask users to dig into their past for examples and anecdotal evidence. And when we dig deep into the whys, we often hear several explanations of how they faced certain difficulties or why they took certain actions.

For example, a user complained that his business was not doing well because of the rise of the internet and people buying things online rather than coming to stores. When I posed the counter-question of why he was not getting into e-Commerce, I received a rather surprising answer: “The websites are not taking good care of customers and if there is any damage/problem with our product it reflects badly on our name.” It was a clear indication that he was not really aware of how e-Commerce works, or that customers could return damaged items and leave reviews for sellers.

Customers can’t be blamed for making up such reasons, but it’s really important for interviewers to be aware of them and to constantly double-check the evidence behind their statements and anecdotes.

For researchers, this also means that when we don’t have all the answers about an aspect we were supposed to uncover, we need to admit it instead of covering the gap with false reasons.

4. Social Desirability Bias

One word sums this up: ‘SELFIES’.

What is it?

We are social animals, which means we present our actions and words in a way that makes us look good to others, even when that makes them inaccurate. This is so deep-rooted in our behavior that we disdainfully label those who don’t follow these norms as anti-social.

Example

This bias comes up particularly when you are researching a topic that has social capital associated with it (social media, online platforms, matchmaking services, etc.).

For example, consider a middle-aged social media user who wants to project an image of a leader in their family. They would never post or say anything that might hurt that image, so even if there is a usability issue and they find the app difficult to navigate, they might still not complain about it. A skilled researcher would make the effort to reframe the questions in a way that protects their social image (for example: “If you could design this better for your dad/mom, what would you do?”). This can give clues to the issues they really find annoying but never speak up about.

5. Sunk Cost Fallacy

Again, one of the most common biases. It causes a lot of damage not only in research but also in major life choices (marriage, debt, etc.).

What is it?

Our decisions are tainted by the investments, emotional and otherwise, that we accumulate, and the more we invest in something, the harder it becomes to abandon it. In other words, the deeper we get into the maze, the harder it becomes to get out (subtle Westworld reference). The next time you have ‘just one more drink’ and pass out, or keep calling with a bad hand in poker, you know which bias to blame.

Example

As researchers, we invest a lot of time in conducting research and collecting data. Over time, this data can become a burden rather than a help. Obsessing over the findings, we can easily get lost and miss the bigger picture of what we really need to achieve and deliver.

To avoid this bias, it is important to balance our efforts and rewards. This means breaking the research down into smaller chunks and making go/no-go decisions after each chunk. The Lean Startup methodology is, in a way, aimed at reducing this bias by pushing entrepreneurs to run small experiments and test the results objectively, rather than spending time and effort only to reach a futile conclusion.

Ultimately, it takes a shift in mindset: getting used to, and making peace with, the fact that losses and failures are an inevitable part of life.

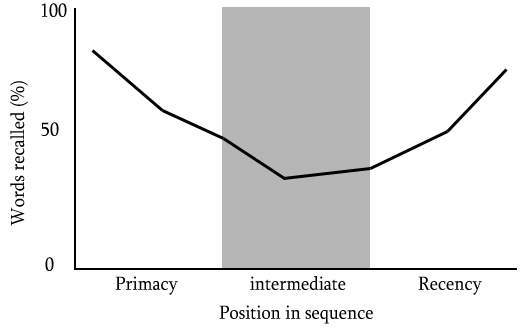

6. Serial-Position Effect

The U-shaped destiny of long lists.

What is it?

If your name starts with M and appears in an alphabetically ordered list, then, sorry to say, it likely won’t be noticed.

The serial-position curve illustrates the percentage of words recalled compared to their position in a sequence. Because of the way our memory works, we pay more attention to the beginning and end of long lists (the primacy and recency effects) than to the middle.

Example

In tasks such as card sorting, this bias can lead to users omitting or ignoring the middle elements, which might hamper the effectiveness of the activity/experiment.

This bias can also affect continuous field studies, where you go into the field and interview 5–10 or more users in a stretch before getting to synthesis. It gets tricky when you lay out your observations in interview order (on sticky notes or in Word docs): you tend to overemphasize those from the first and last interviews.

A good way around this is to break the observations into smaller chunks, or to randomize their arrangement a few times to even out the bias.
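If your notes are digital (a spreadsheet or a list of quotes), the randomize-and-chunk step can be scripted. The Python sketch below is only an illustration: the note texts and the participant labels P1 to P6 are hypothetical, and `review_orders` and `chunked` are helper names made up for this example. It produces a few independently shuffled orders of the same notes, each split into small groups, so that no single interview stays pinned to the start or end of the wall across review rounds.

```python
import random


def review_orders(notes, rounds=3, seed=None):
    """Return `rounds` independently shuffled copies of `notes`.

    The input list is never modified; each round gets its own copy.
    """
    rng = random.Random(seed)  # a fixed seed makes a synthesis session reproducible
    orders = []
    for _ in range(rounds):
        order = list(notes)
        rng.shuffle(order)  # in-place shuffle of the copy only
        orders.append(order)
    return orders


def chunked(items, size):
    """Split `items` into consecutive groups of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]


# Hypothetical notes, tagged with the interview they came from (P1 = first interview).
notes = [
    "P1: couldn't find the search bar",
    "P2: prefers paying at pickup",
    "P3: compares prices in another app first",
    "P4: worried about returns",
    "P5: couldn't find the search bar",
    "P6: shops only on weekends",
]

for round_no, order in enumerate(review_orders(notes, rounds=3, seed=42), start=1):
    print(f"Round {round_no}")
    for group in chunked(order, size=3):
        print("   ", group)
```

Passing `seed=None` gives fresh orders on every run; a fixed seed is handy when several people need to review the same arrangement.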

This bias can also creep into to-do lists and feature lists, especially for people in charge of getting many things done at the same time. Categorization is a highly effective strategy to counter it.

7. The illusion of transparency

A dangerous bias that leads to misinterpretation and miscommunication.

What is it?

If you have ever played charades, you already know this bias. We tend to overestimate the extent to which others know what we are thinking/trying to convey.

Most of our subjective experience is not observable, and we tend to overestimate how much we telegraph our inner thoughts and emotions.

Example

In interviews, many participants try to convey their emotions through body language, pauses, and other non-verbal cues. Because of the illusion of transparency, they tend to assume the message is getting through, which makes it difficult for them to know whether it has really been understood.

This means we need a separate mechanism to check whether we are missing aspects that the interview itself might not catch. This is why providing affirmative feedback, playing back what you understood, is important. It can be as simple as, “So from what you said, I feel like you are feeling this way about this feature. Pardon me if I’m wrong.”

You will often be surprised to find that you interpreted what they said in a completely different way.

8. Clustering Bias

Can lead to a lot of false positives and false negatives in cognition.

What is it?

Clustering bias is our tendency to see meaningful clusters or patterns in events that are really random. It is what happens when we describe someone as having a ‘hot streak’, such as a night of poker or a football tournament where everything seems to go right for the player. But in a short sequence of random events, a wide variety of outcomes is expected, including streaks that seem highly improbable.
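A quick simulation makes the last point concrete. The Python sketch below is an illustration under simple assumptions (independent flips of a fair coin; 20 flips and a streak of 4 are arbitrary choices, and `longest_run` and `streak_probability` are names made up for this example). It estimates how often a random sequence contains a run of four or more identical outcomes. Counting the sequences exactly gives roughly 77%, so a ‘streak’ that feels meaningful is actually the norm in pure randomness.

```python
import random


def longest_run(flips):
    """Length of the longest run of identical consecutive outcomes.

    Assumes `flips` contains at least one element.
    """
    best = current = 1
    for prev, cur in zip(flips, flips[1:]):
        current = current + 1 if cur == prev else 1  # extend or restart the run
        best = max(best, current)
    return best


def streak_probability(n_flips=20, streak=4, trials=20_000, seed=0):
    """Monte Carlo estimate of P(a fair-coin sequence contains a run >= streak)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        flips = [rng.random() < 0.5 for _ in range(n_flips)]  # True = heads
        if longest_run(flips) >= streak:
            hits += 1
    return hits / trials


print(f"P(run of 4+ in 20 fair flips) is about {streak_probability():.2f}")
```

The same logic applies to a handful of interviews: a few participants who happen to say similar things can look like a pattern, even when chance alone would produce it fairly often.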

Example

As researchers, finding patterns in data is our bread and butter. But a drawback of qualitative analysis is that with such small sample sizes, it is often hard to avoid seeing patterns that might just be random coincidences that appear to have something in common. An effective way to counter this is to triangulate patterns, matching data-driven insights based on large sample sizes with the deeper insights found in qualitative research.

It can also be reduced by conducting research and prototyping with completely different and diverse sets of users.

Another way to avoid it is to hold a silent brainstorming session before discussing patterns, and to include a diverse set of stakeholders in the analysis, so that individual biases have a better chance of canceling or evening out.

9. Implicit Bias

Again, a really tricky and dangerous one, often called stereotyping in popular culture.

What is it?

These are the attitudes and stereotypes we associate with people without our conscious knowledge. Implicit bias is really difficult to root out, since it has been embedded in our minds from a very young age through media, the people around us, and popular culture.

Example

One of the most prominent examples of implicit bias is police officers associating Black people with crime without realizing they are doing it.

In the context of user research, it can happen when we talk to people from demographic, racial, or ethnic groups about whom we already hold preconceived notions and generalizations. It can lead us to behave in ways that are not really necessary (such as being overly polite to disabled people when they would rather be treated like anyone else). A good practice to avoid this is to write down all your preconceived notions about the person before going into the interview, and to know as little as possible about them before speaking to them.

It’s important to always remember that, as good researchers, our duty is not to be socially appealing or to become friends with our users, but to really understand what is going on inside their minds and how they think, even if that means some awkward silences or small disagreements.

10. Fundamental Attribution Error

A favorite among designers and usability enthusiasts, it shows up when people blame themselves, or others, for not being able to understand technology, instead of blaming its design.

What is it?

It is the tendency of people to overemphasize personal characteristics and ignore situational factors in judging others’ behavior. Because of the fundamental attribution error, we tend to believe that others do bad things because they are bad people. We’re inclined to ignore situational factors that might have played a role.

Example

When you conduct usability tests and you hear a user talking about making a ‘mistake’ doing a certain task, pay attention! That might be the biggest clue towards creating a better product/experience.

Really good products make the user think less and get more done. Think of any app/website where you blame yourself for not being able to do a certain thing right. There is an opportunity for improvement.

A good way to avoid this bias is to complement interviews with observations and heat maps. They provide a better account of how users actually use the product and the kinds of errors they make because of poor design. It’s also common for engineers to push the blame onto users and coach them on correct usage. But as researchers, we need to fight this notion. Products and their learning curves need to be designed so that even a beginner can pick them up within a few tries and not feel guilty about failing.

In summary, here are the 10 biases to take away to improve your research practice:

- Framing effect: The framing of questions can influence responses

- Confirmation Bias: Humans tend to look only for evidence that confirms their hypotheses

- Hindsight Bias: Humans tend to find reasons for past events and see them as more predictable than they were

- Social Desirability Bias: Humans tend to speak in a way that makes them look good

- Sunk Cost Fallacy: Humans tend to hold on to losing investments longer than they should

- Serial-Position Effect: Humans tend to remember items at the beginning and end of lists better than those in the middle

- Illusion of transparency: Humans tend to overestimate the extent to which others know what they are thinking

- Clustering Bias: Humans tend to find patterns amid randomness when there are really none

- Implicit Bias: Humans hold implicit associations about certain groups and their behavior

- Fundamental Attribution Error: Humans tend to attribute errors to internal characteristics even when they are situational or caused by external forces