Part 5

Designing responsibly with AI: how designers & technologists perceive ethics

Part 5 of the series ‘Designing responsibly with AI’ to help design teams understand the ethical challenges when working with AI and have better control over the possible consequences of their design on people and society.

Recap of previous parts

As part of the series ‘Designing Responsibly with AI’, this article follows parts 1 to 4, which form the literature review of this research. Here is a recap:

- Part 1 — Introducing ‘Designing responsibly with AI’

- Part 2 — AI today: definition, what is AI for? risks and unexpected consequences on society

- Part 3 — Ethical dilemmas of AI: fairness, transparency, human-machine collaboration, trust, accountability & morality

- Part 4 — Design of Responsible AI: ethics and design, from Human to Humanity Centred Design, imbuing values into autonomous systems

Overview of part 5

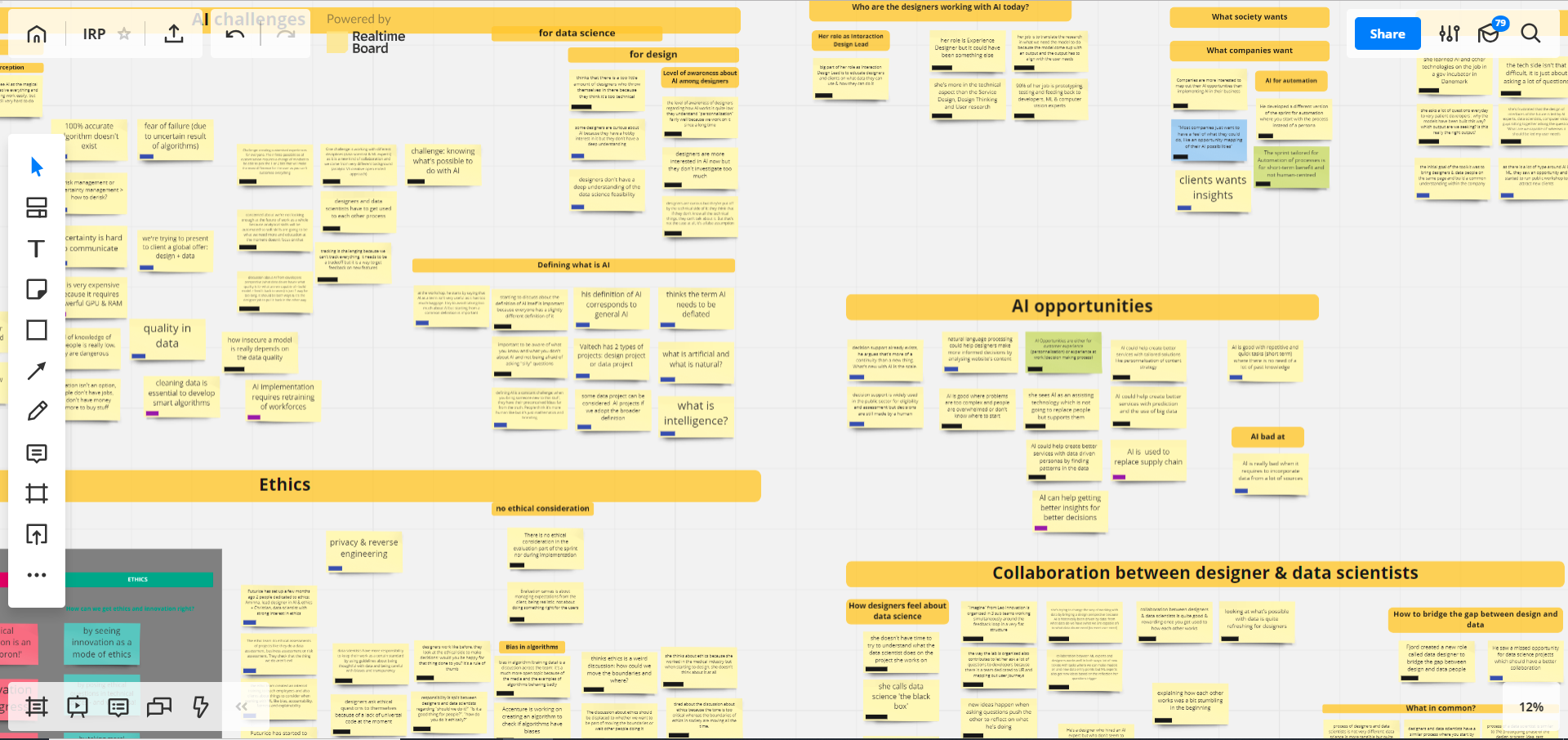

Building on the literature review, this fifth article presents the primary research I conducted to answer the following questions:

- What is the level of awareness about AI?

- How do practitioners navigate the complexity of designing with the uncertain nature of AI & ML?

- How is ethics perceived?

- How and when are ethical considerations practically applied during the design process?

- Who is involved in ethical discussions?

Research methodology

This project was conducted over 20 weeks, and all the data were gathered from primary and secondary research.

To gain an understanding of AI ethics and responsible design, a selection of writings from academic journals, industry reports, thought leaders and practitioners were analysed and synthesised in the previous literature review.

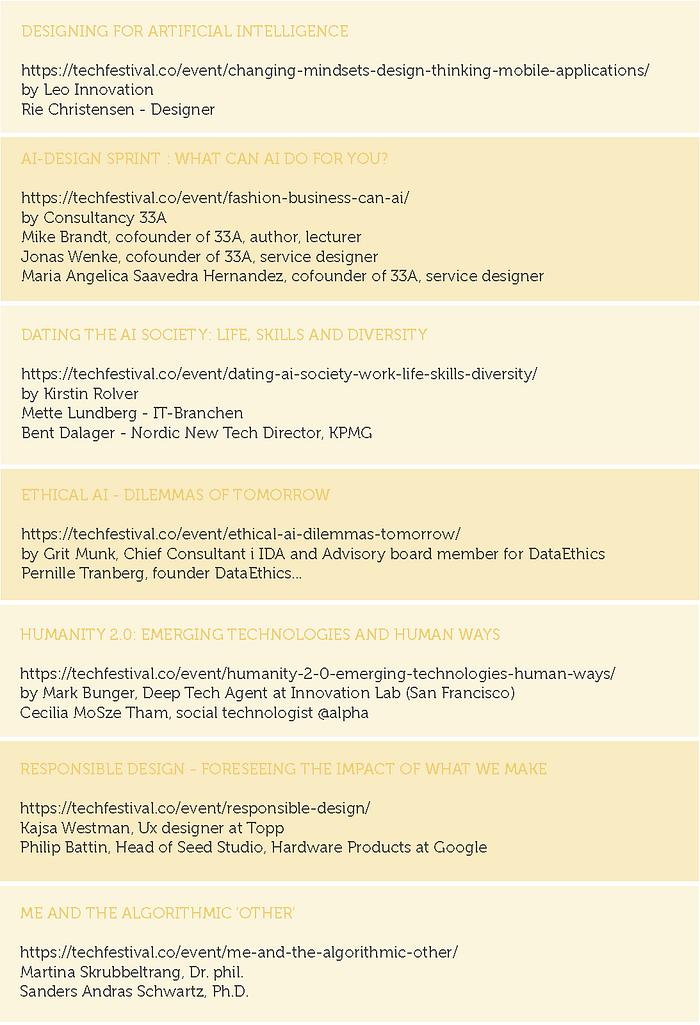

During the study, a four-day immersion at the Techfestival in Copenhagen made it possible to attend numerous meetups on AI ethics, AI design and responsible design. It served as qualitative research to gain a better understanding of these topics from a wide range of industry experts, and as a means to build a network of relevant professionals (Fig. 3.1).

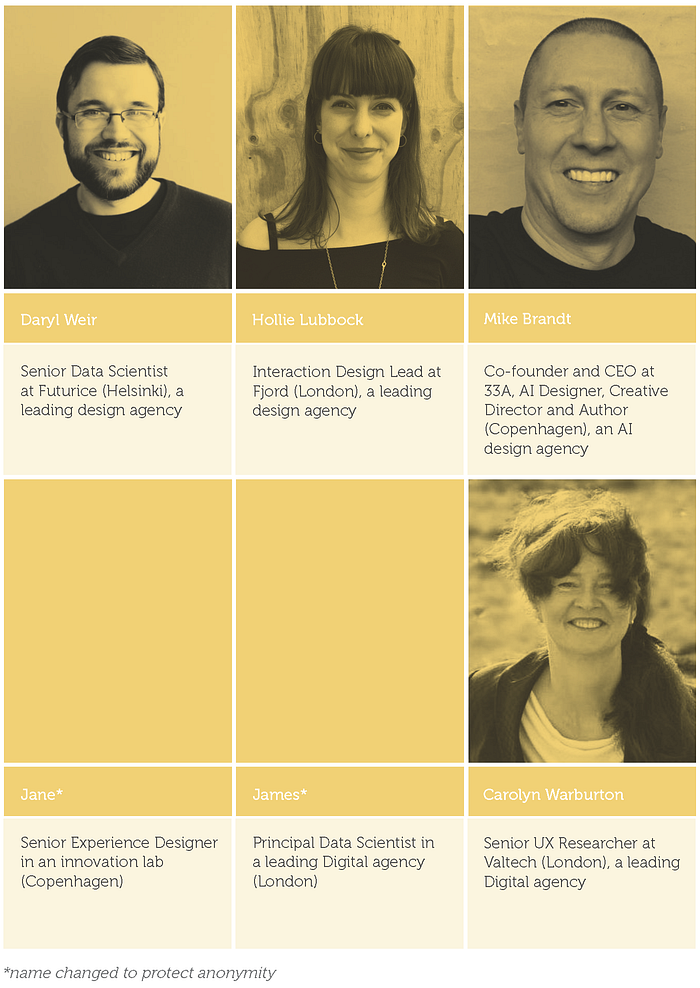

In-depth interviews with senior level designers and data scientists (Fig. 3.2) were conducted to explore their perspective on AI ethical challenges, understand their perception of ethics in design and scrutinise their ethical considerations during the design process when working with AI technology (Fig. 3.3).

Building on the research, a prototype process and an associated toolkit were created to test and evaluate the findings from the literature and interviews. The process and toolkit were tested and iterated upon through a combination of feedback from industry experts and potential users. Results were analysed, discussed and reflected upon.

As the researcher, I took care to ensure an ethical research practice towards all the participants interviewed. Interviews were recorded with the participants’ consent. Consent forms were obtained from everyone who contributed to the research, and, as requested, the anonymity of two interviewees under confidentiality agreements has been respected.

What is the level of awareness about AI?

As explained previously, AI has no clear definition, which leaves people confused about what counts as AI and how it works. But what is the level of awareness about AI among the people behind the technology? How ready are the designers and technologists who develop AI-powered products and services? And how knowledgeable are the clients who ask design agencies to develop these products and services?

Every practitioner is confused about what counts as AI and how it works.

Hollie Lubbock (2018) stresses the importance of starting with a discussion of the definition of AI itself, because everyone has a slightly different definition of it. When I spoke with the Principal Data Scientist of a digital engineering agency, his definition of AI corresponded to general AI, a form of AI that does not exist (James, 2018). Daryl Weir (2018) underlines that defining AI is a constant challenge because “when you bring someone new to this stuff, they have their preconceived ideas far from the truth. People think it’s more human-like, but it’s just mathematics and branding”.

Designers are not ready to work with AI.

Weir (2018) explains that before he arrived at Futurice, designers’ awareness of AI and ML was shallow, as there was no overlap between data science projects and design projects. Lubbock (2018) corroborates this, describing designers’ awareness of how AI works as quite low. She adds that designers understand “personalisation” reasonably well, but only because they have worked on it for a long time.

Designers’ curiosity is put off by the technical aspect of AI.

Lubbock (2018) points out that designers are more interested in AI now, but they do not investigate it much. Weir (2018) underlines that some designers are curious about AI because they have a hobby interest in it, but they do not have a deep understanding of data science feasibility. Jane (2018) explains that too few designers throw themselves into it because they think it is too technical. Lubbock (2018) reaches the same conclusion: designers are curious, but they are put off by the technical side, as they think that if they do not know all the technical details, they cannot talk about it, which is a false assumption.

Clients do not fully understand what AI can or cannot do.

Regarding clients, Lubbock (2018) explains that many people see AI as a magical saviour that will solve everything and make everything work easily, whereas it is still tough to do. Weir (2018) illustrates this by explaining that clients who want to automate a process often bring a few thousand examples of behaviour, whereas ten thousand or more are needed to get good performance.

Top-notch design firms have barely started initiatives to increase the level of awareness about AI.

As the general level of awareness about AI among design practitioners and clients can be described as low, what are top-notch design agencies doing to bridge the gap? At Fjord, the Dock, Accenture’s research and development branch in Dublin, has developed a half-day workshop to train the other studios; a new role called ‘Data Designer’ has been created; and a big part of Lubbock’s role is to educate designers and clients on what data they can use and how they can use it (2018). At Futurice, Weir (2018) explains that the firm tries to bridge the gap from the other direction by providing a crash course in Service Design for everyone in the company. It has also recently created an ethics team to develop internal training that teaches employees, and also clients, what to consider when working with ML, such as bias, accountability, fairness and explainability.

How do practitioners navigate the complexity of designing with the uncertain nature of AI & ML?

As previously mentioned, a new interdisciplinary collaboration between designers and data scientists has started to emerge within top-notch design firms like Ideo, to face the new challenges of designing products and services alongside algorithms that now shape most of the experience. But how does this new collaboration work in practice? And how do practitioners navigate the complexity of designing with the uncertain nature of AI and ML?

Designers and data scientists try to collaborate.

Introducing data scientists into Human-Centred Design teams raises the question of what the new paradigm for collaboration should be. Should designers be involved in the most technical work, or should it be left to data scientists? Carolyn Warburton (2018) sees data science as a magical black box and feels she does not have time to understand what the data scientist on her project does, as she cannot know everything. Lubbock (2018) explains that explaining how each discipline works was a bit of a stumbling block at the beginning, but once everyone got used to each other’s work, the collaboration between designers and data scientists became enjoyable and rewarding. While Lubbock (2018) finds that looking at what is possible with data is quite refreshing for designers, Jane (2018) thinks that the collaboration works well both ways, as ML experts also get new ideas from reflecting on the new questions designers ask.

Weir (2018) points out that it is good to have a data scientist in the team to evaluate ideas after ideation, during a “prioritisation session” that looks at business impact and data science feasibility. Mike Brandt (2018), who has just launched an AI design sprint to develop new service ideas with his clients in only half a day, keeps each discipline separate: designers facilitate the workshop with clients, and the AI expert evaluates the ideas afterwards to find the right algorithm for the right data. Weir (2018) thinks that establishing a common language is the hard part of the collaboration, as there are so many terms and so many competing interests; because the vocabulary is domain-specific, it is hard to ensure that everyone understands what the others mean.

Design teams try to evaluate ideas and manage risks.

As ML algorithms are inherently uncertain and never 100% accurate, practitioners evaluate ideas and manage risks. Jane (2018) explains that ML experts map the risks by defining false positives and false negatives, then make recommendations. Lubbock (2018) highlights that in some cases false positives are really damaging, while in others they are not that bad.

“It is about understanding whether it is going to be a catastrophic failure if your algorithm fails or not, then treat your design solutions quite differently depending on it”.

She further clarifies that

“every time you start to personalise or customise something, you may make it worse for other people depending on how the customisation is done”,

so the pros and cons of each element need to be evaluated. As presented earlier, the confusion matrix is used for risk assessment, and Weir (2018) reveals that they use it to measure the impact of errors on both users and the business. He further explains that the assessment sometimes kills an idea because it is too risky (because of legal implications, or because it could harm users), or because it might be good for the user but not for the business, so it is worth doing the exercise early on. Lubbock (2018) mentions that they use a framework to examine responsibility and rate the algorithm on different scales. The framework looks at whether decisions are made by machines alone or by humans and machines together, and helps decide who should hold responsibility by testing with people how comfortable they are with algorithms that need time to train. She clarifies that they research whether users are OK with waiting a month before getting good results, and whether they expect the results to be right all the time.
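To make the confusion-matrix exercise more concrete, here is a minimal sketch of weighing errors separately for users and for the business. It is an illustration under stated assumptions, not Futurice’s actual method: the class and function names, the per-error cost scales, the evaluation counts and the risk threshold are all hypothetical. Higher costs can stand in for errors that would be a “catastrophic failure”, and the idea is flagged if either side exceeds the threshold.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts of a binary classifier's outcomes on an evaluation set."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives

    @property
    def total(self) -> int:
        return self.tp + self.fp + self.fn + self.tn


@dataclass
class ErrorCosts:
    """Hypothetical cost of a single error, on an arbitrary scale."""
    false_positive: float
    false_negative: float


def impact_per_prediction(cm: ConfusionMatrix, costs: ErrorCosts) -> float:
    """Average cost of errors per prediction; correct outcomes cost nothing here."""
    weighted = cm.fp * costs.false_positive + cm.fn * costs.false_negative
    return weighted / cm.total


def assess_idea(cm: ConfusionMatrix, user_costs: ErrorCosts,
                business_costs: ErrorCosts, threshold: float):
    """Return (user impact, business impact, verdict).

    The idea is flagged when either impact exceeds the hypothetical threshold,
    so an idea that is good for users but costly for the business is still flagged.
    """
    user = impact_per_prediction(cm, user_costs)
    business = impact_per_prediction(cm, business_costs)
    verdict = "too risky" if max(user, business) > threshold else "proceed"
    return user, business, verdict


if __name__ == "__main__":
    # Illustrative numbers only: 1,000 evaluated predictions.
    cm = ConfusionMatrix(tp=80, fp=15, fn=5, tn=900)
    # Assumption: for users, a missed case (false negative) hurts most.
    user_costs = ErrorCosts(false_positive=1.0, false_negative=8.0)
    # Assumption: for the business, false alarms are the costlier error.
    business_costs = ErrorCosts(false_positive=4.0, false_negative=2.0)
    print(assess_idea(cm, user_costs, business_costs, threshold=0.06))
```

With these made-up numbers, the user impact is 0.055 and the business impact 0.07 per prediction, so the idea is flagged as too risky even though it would pass on the user side alone. Taking the maximum rather than the sum is one possible design choice: it lets either perspective veto an idea, which matches the idea of killing concepts early when one side is exposed.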

Designers use and adapt the tools they know.

While risk assessment is more the territory of data scientists, designers continue to do what they know or adapt some of their tools. Lubbock (2018) stresses the use of feedback loops to learn from users: “if you look at behaviour change, how do you track that? What does he do next?”. She explains that tracking provides new data input and makes it possible to look at what the algorithm does afterwards. This in turn enables data scientists to map out how the system behaves using decision trees, the most simplistic approach: “if the system does this thing, so do this thing instead”. Jane (2018) underlines the importance of testing with users more than interviewing them, as “you look at future needs that people are not prepared for”. Lubbock (2018) agrees, saying that experimenting and testing with data scientists is incredibly important, especially for unknowns such as predictions. When clients have lots of data, Weir (2018) notes that they use data-driven journey mapping. Likewise, Lubbock (2018) explains that data are added as a new layer in customer journey maps and blueprints, which also facilitates communication with clients.

Practitioners are too optimistic.

While data scientists conduct risk assessments and designers try to learn how users interact with the system, Lubbock (2018) reveals that algorithms have not been tested at scale and that practitioners assume they will work by focusing on the happy path. She specifies that for tasks AI is good at, like natural language processing or image recognition, it is easier to look at user impact, but for deep learning and prediction it is quite tricky, because teams have to assume that the solution is feasible and study its effects on users without knowing whether it actually is. Therefore, testing at scale should start earlier, to confirm whether the solution is going to work before the design begins (Lubbock, 2018). Rather than being naively optimistic in the face of uncertainty, Brandt (2018) recommends starting small to give the project a high chance of success.

How is ethics perceived?

When designers and technologists are asked about ethics, their reactions vary widely and reveal different perceptions.

Ethics is abstract.

Just as what counts as AI is unclear, what counts as ethics is also hazy. Jane (2018) perceives it as what society is willing to accept, and does not see algorithmic inaccuracy as an ethical concern because it relates to code. She also describes her work with AI as aiming to support people rather than replace them, much as Brandt (2018) mentions the problem of AI killing jobs. He also talks about managing client expectations about the algorithm’s accuracy.

Ethics is boring or negatively perceived.

In the foreword of Bowles’s book, Alan Cooper points out that

“ethics has rightfully earned its reputation as a ridiculously boring topic […] Contemporary practitioners find little traction in the world of conventional ethical thinking” (2018).

Likewise, Jane (2018) says that she is tired of the discussion about ethics because “it is the same arguments we hear for quite some time” and “the tone is too critical”.

Ethics is rigid, not forward-looking.

In her presentation, Vallor (2018) gives rigidity as one of the reasons why she thinks we got ethics and innovation so wrong. Indeed, for Jane (2018), ethics is a strange discussion because it is always about whether and where we could move the boundaries, as in “is it ethical to get a diagnosis made by an AI?”. She illustrates this with the example of choosing a baby’s gender: “five years ago, people were afraid of it, and now, lots of people are travelling overseas to have it done, even in Denmark, it becomes more and more normal”. This shows that the boundaries of ethics move too, so she suggests shifting the debate to whether we want to take part in moving the boundaries or wait for other people to do it.

Ethics is complicated.

Lubbock (2018) mentions that one problem with ethics is that what is right for one culture is not necessarily right for another, which is especially challenging given the current lack of a universal ethical code for design.

Ethics is not seen as compatible with business goals.

Vallor (2018) also cites the view of ethics and innovation as antagonists as another reason why she thinks we got ethics and innovation so wrong. Lubbock (2018) explains that ethics is complicated because

“sometimes you are kind of blindfolded because you work for a business goal whereas you should step back and ask whether it is the best way to achieve it for the users”.

James (2018) also points out that ethics is hard to communicate with clients.

Human-centred design is seen as doing the work of ethics.

As previously discussed, Brown (cited in Budds, 2017) thinks that human-centred design and design thinking are the answer to problems with AI, although the approach has shortcomings, especially regarding externalities. Vallor (2018) similarly identifies seeing innovation as doing the work of ethics as another problem, as innovation is systematically perceived as progress. Jane (2018) epitomises this view, explaining that her work is ethical because she takes user needs and data into account when building models and designs with users rather than for them, and concluding that “we’re not going to replace people, we’re going to support them with AI”.

How and when are ethical considerations practically applied during the design process?

As presented above, practitioners use different ways to navigate the complexity of designing with the unpredictable nature of AI, whether or not they consider this applied ethics. But how and when do they practically apply ethical considerations during the design process of AI-powered products or services?

When considered, ethics is often applied unconsciously in design.

When asked about ethical concerns regarding inaccuracy in algorithms, Jane (2018) retorts “it is not ethics, it is just code”. Yet she goes on to explain that “if a machine learning model is not 100% accurate for a suggestion, a possible approach would be to tell the user that is an AI which made the suggestion and make it transparent for the user; we need to translate the model of the algorithm in a way that the user can understand”. This remark, which contradicts her previous statement, shows that she applies the principle of transparency as a “normal” thing to do, even for a mere application of AI.

Ethics does not have a transparent process or specific tools; it is a gut feeling.

In her talk about ‘Responsible Design’, Kajsa Westman (2018), UX designer at the design agency Topp, provides a list of questions she asks herself when making decisions during the design process to ensure experiences free of adverse impact. She proposes four categories as a guiding compass for ethical design: matching physical/digital longevity; honest, or deceptive?; could this be harmful?; does this add actual value? Likewise, Lubbock (2018) tries to understand the impact of a solution and to make sure it makes things better for everyone by asking:

“is it going to improve it? How many people is it going to improve it for? Is it going to make it worse for another group of people?”

She also evaluates whether a solution is ethical by reflecting on how she feels about it, asking herself questions like

“is it upsetting me? Does it feel right? Do I feel comfortable explaining it to someone?”

which reflects a virtue ethics approach. Weir (2018) mentions that Futurice is in the process of developing its ethical principles, but does not yet have a plan for putting them into practice. He indicates that they do not have a process to evaluate long-term consequences and that

“it is more a rule of thumbs where they imagine what would be the worst that can arrive if you are in a filter bubble”.

Lubbock (2018) describes evaluating the impact of design as always very hypothetical: they take the worst-case scenario, almost like disaster planning, and work back from it. She describes the process as more of a gut feel at the moment. Her approach seems close to the backcasting technique previously presented. Among the practitioners encountered in this research, only Google presented a more structured method for seeking out the potential negative consequences of its designs. In a talk at the Techfestival, Philip Battin (2018), Head of Seed Studio, Hardware Products at Google, showcased their approach of rehearsing the future by developing design fiction for a field study to inform their portfolio strategy. This approach involves users in a contextual enquiry where they can interact with the products within a vision of what the future would look like for Google.

The ethical considerations during the design process have not changed for AI.

Lubbock (2018) observes that designers work as before, considering the ethical side when making decisions and asking

“would you be happy for that thing done to you?”.

Ethics is mostly considered at the end of the design process after ideation.

Jane (2018) confesses that she only thinks about ethics because she works in the medical industry, and that when she starts designing, she does not think about it at all. Brandt (2018) explains that they evaluate ideas only after the design sprint because they need participants to “go crazy during the ideation session”. Finally, Weir (2018) mentions that the new ethics team carries out ethical assessments of projects in the same way as data, business or risk assessments:

“they check that the things we do are not evil”.

Who is involved in ethical discussions?

Understanding how ethical considerations are practically applied during the design process raises the question of who is involved in those discussions. My research on this topic is limited, since designers often apply ethical considerations unconsciously, and it is biased, since the responses I received come from industry experts at the forefront of these issues. I therefore share these findings with caution, as they are not representative of industry trends.

Designers and data scientists are equally involved.

“Bias in algorithms (training data) is a discussion across the team: it is a much more open topic because of the media and the examples of algorithms misbehaving. Responsibility is split between designers and data scientists regarding ‘should we do it?’, ‘is it a good thing for people?’, ‘how do you do it ethically?’. Data scientists have more responsibility to keep their work at a certain standard by using guidelines about being thoughtful with data and being careful with biases and transparency” (Lubbock, 2018). Both Weir and Lubbock (2018) stress the importance of having a diverse group of people discuss ethics and potential problems as early as possible, and Weir adds that each person should bring their own subject expertise. Vallor (2018) also stresses that ethical questions should be asked in technical spaces and vice versa.

Practitioners, rather than firms, are leading ethical discussions.

“Principles supporting augmentation is more led at an individual level at the moment, but we started to discuss what is appropriate or not in a quite open debate” (Lubbock, 2018).

Users are sometimes involved.

“At the exhibition, we asked people if they felt happy or not to have machines taking decisions. Results: society is quite hesitant at the moment because there are many decisions they are not really aware of” (Lubbock, 2018).

Next articles

If you are interested in this topic, you can read the other articles in this series at the links below:

- Part 1 — Introducing ‘Designing responsibly with AI’

- Part 2 — AI today: definition, what is AI for? risks and unexpected consequences on society

- Part 3 — Ethical dilemmas of AI: fairness, transparency, human-machine collaboration, trust, accountability & morality

- Part 4 — Design of Responsible AI: ethics and design, from Human to Humanity Centred Design, imbuing values into autonomous systems

- Part 5 — Challenges of designing responsibly with AI: how designers & technologists perceive ethics and work with AI (you’re here 👈)

- Part 6 — Challenges of designing responsibly with AI: recommendations for how might ethical considerations be practically applied to the design process

- Part 7 — Designing the application of ethics for AI within the design process: the process and toolkit

- Part 8 — Validating the process and tools for designing responsibly with AI: conclusion and path forward

The end.

Bibliography

The full bibliography is available here.

Before you go

Clap 👏 if you enjoyed this article to help me raise awareness on the topic, so others can find it too

Comment 💬 if you have a question you’d like to ask me

Follow me 👇 on Medium to read the next articles of this series ‘Designing responsibly with AI’, and on Twitter @marion_bayle