Don’t build trust with AI, calibrate it

Designing AI systems with the right level of trust

AI operates on probabilities and uncertainties. Whether it’s object recognition, price prediction, or a Large Language Model (LLM), AI can make mistakes.

The right amount of trust is a key ingredient in a successful AI-empowered system. Users should trust the AI enough to extract value from the tech, but not so much that they’re blinded to its potential errors.

When designing for AI, we should carefully calibrate trust instead of making users rely on the system blindly.

How people trust AI systems

According to a meta-analysis by Harvard researchers, people often place too much trust in AI systems when presented with final predictions. Simply providing explanations for AI predictions doesn't solve the problem, because explanations tend to serve as a signal of AI competence rather than drawing attention to AI mistakes.

Sure, a few wrong turns won’t ruin your journey if you’re just looking for movie or music recommendations. But in critical decision-making situations, relying on final predictions can make users “co-dependent on AI and susceptible to AI mistakes.”

The trust issue becomes even more critical with LLM chatbots, whose conversational interactions naturally create more trust and even emotional connection.

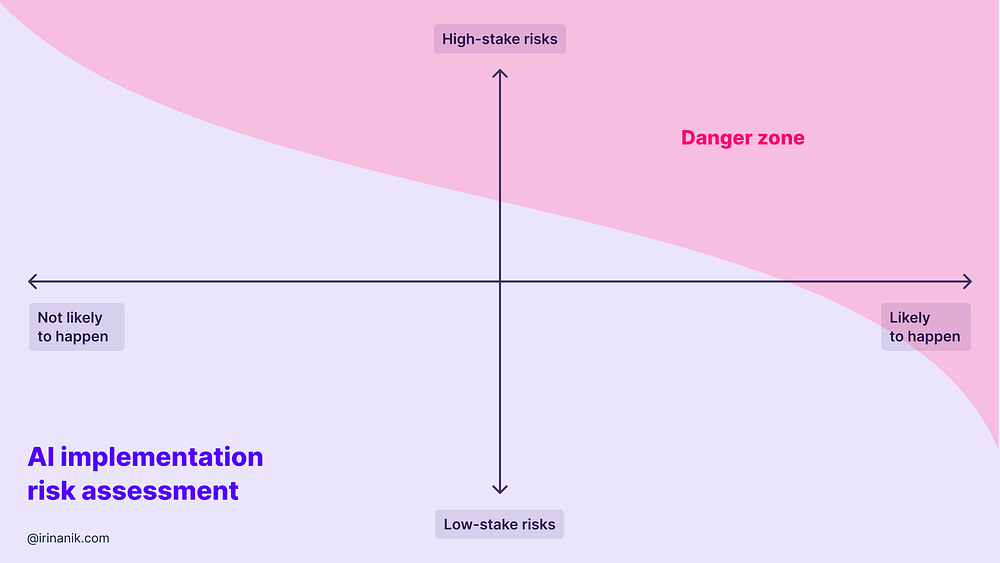

Evaluate risk

Everyone involved in creating AI, at any step, is responsible for its impact. The first thing AI designers have to do is understand the risk of their solution.

How critical is it if AI makes a mistake, and how likely is it to happen?
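One way to make these two questions concrete is a severity × likelihood matrix, a common risk-assessment technique. The sketch below is a minimal illustration, assuming a hypothetical three-level scale for each axis and hypothetical thresholds; the suggested design responses in the comments are examples, not rules.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def risk_tier(severity: Level, likelihood: Level) -> str:
    """Classify risk as severity x likelihood (hypothetical thresholds)."""
    score = severity * likelihood  # ranges from 1 to 9
    if score >= 6:
        return "high"    # e.g. keep a human in control, avoid bare end predictions
    if score >= 3:
        return "medium"  # e.g. show confidence and explanations
    return "low"         # e.g. movie or music recommendations


print(risk_tier(Level.LOW, Level.MEDIUM))   # low
print(risk_tier(Level.HIGH, Level.MEDIUM))  # high
```

The exact scale matters less than forcing the team to answer both questions explicitly before choosing how much to show or automate.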

If the available training data is biased, messy, subjective, or discriminatory, it is almost certain to lead to harmful results. In that case, the team's first priority should be to prevent this.

For example, a recidivism risk assessment algorithm used in some US states is biased against Black people. It predicts how likely a defendant is to commit a future crime and often rates Black defendants as higher risk. The algorithm was trained on data reflecting years of biased human decisions in the justice system.
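A basic first check for this kind of bias is to compare error rates across groups. The sketch below computes the false positive rate per group, meaning the share of people who did not reoffend but were still flagged as high risk. The record format and group labels are hypothetical, the data is a toy example rather than real outcomes, and a real fairness audit involves far more than one metric.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return the false positive rate per group.

    Each record is a hypothetical (group, predicted_high_risk, reoffended) tuple.
    FPR = people wrongly flagged as high risk / all people who did not reoffend.
    """
    flagged = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}


# Toy data for illustration only.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, True),
]
rates = false_positive_rates(records)
print({g: round(r, 2) for g, r in rates.items()})  # {'A': 0.67, 'B': 0.33}
```

In this toy data, people in group A who never reoffended are wrongly flagged twice as often as those in group B, which is the kind of gap worth investigating before shipping.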

Keep in mind that LLM chatbots, with their conversational interaction, can be harder to control than single-answer solutions built on more restrictive templates.

The risk also depends on the situation and on the people who will use the system. While doctors have the expertise to judge which medical AI suggestions to take into account, it would be dangerous to let teenagers seek mental health support from AI. Snapchat, we are looking at you.

Set expectations

Misconceptions about AI are common. Some users may trust it too much, while others may be too skeptical.

Be clear about the system’s limits and capabilities. Focus on the benefits that users get, not the technology. Let people know what they can (and can’t) expect from the AI.

If content is generated by an LLM, it’s good practice to be clear about that too.

Make your system explainable

AI should be designed so people can easily understand its decision process. The goal is to explain how a decision was made, not just to justify it.

Data behind recommendations

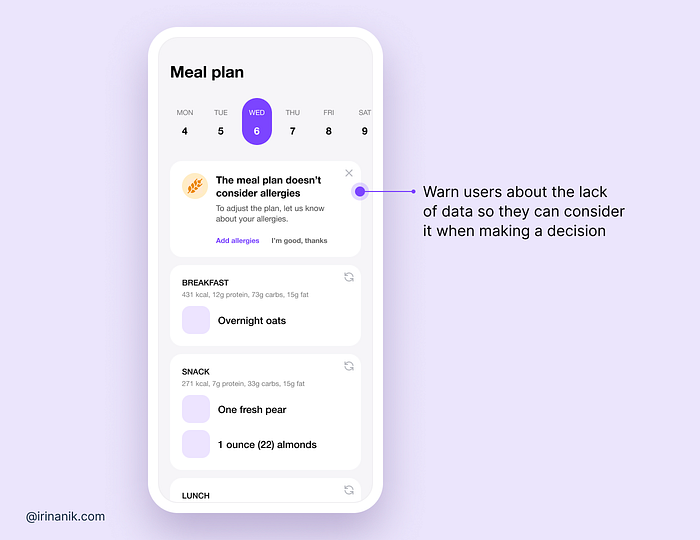

When dealing with sensitive decisions, AI must provide clear explanations of its recommendations, including the data used and the reasoning behind them.

Avoid overwhelming users with too much information at once. Consider progressive disclosure when designing for explainability.

Identify the moments where users need to rely on their own judgment because data is lacking.

Confidence display

Instead of explaining why or how, we can show how certain the AI is about its prediction and which alternatives it considered.

The confidence can be presented as a numerical value, as a category, or as alternative suggestions.

If confidence levels don’t impact user decision-making, it’s okay to leave them out.
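The display options above can be sketched as a single formatting function. This is a minimal illustration, assuming a hypothetical classifier that returns (label, probability) pairs; the 0.8 and 0.5 category thresholds and the style names are illustrative assumptions, not established cut-offs.

```python
from typing import List, Tuple


def format_confidence(predictions: List[Tuple[str, float]], style: str = "category") -> str:
    """Render the top prediction with its confidence in one of several styles.

    predictions: (label, probability) pairs from a hypothetical classifier.
    The 0.8 / 0.5 category thresholds are illustrative assumptions.
    """
    ranked = sorted(predictions, key=lambda p: p[1], reverse=True)
    label, prob = ranked[0]
    if style == "hidden":
        # Confidence wouldn't change the user's decision here, so leave it out.
        return label
    if style == "numeric":
        return f"{label} ({prob:.0%} confident)"
    if style == "category":
        level = "High" if prob >= 0.8 else "Medium" if prob >= 0.5 else "Low"
        return f"{label} ({level} confidence)"
    if style == "alternatives":
        others = ", ".join(name for name, _ in ranked[1:3])
        return f"{label} (could also be: {others})" if others else label
    raise ValueError(f"unknown style: {style!r}")


preds = [("cat", 0.72), ("fox", 0.18), ("dog", 0.10)]
for style in ("numeric", "category", "alternatives", "hidden"):
    print(format_confidence(preds, style))
# cat (72% confident)
# cat (Medium confidence)
# cat (could also be: fox, dog)
# cat
```

Categories are often easier to act on than raw percentages, while showing alternatives nudges users to double-check rather than accept the top answer.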

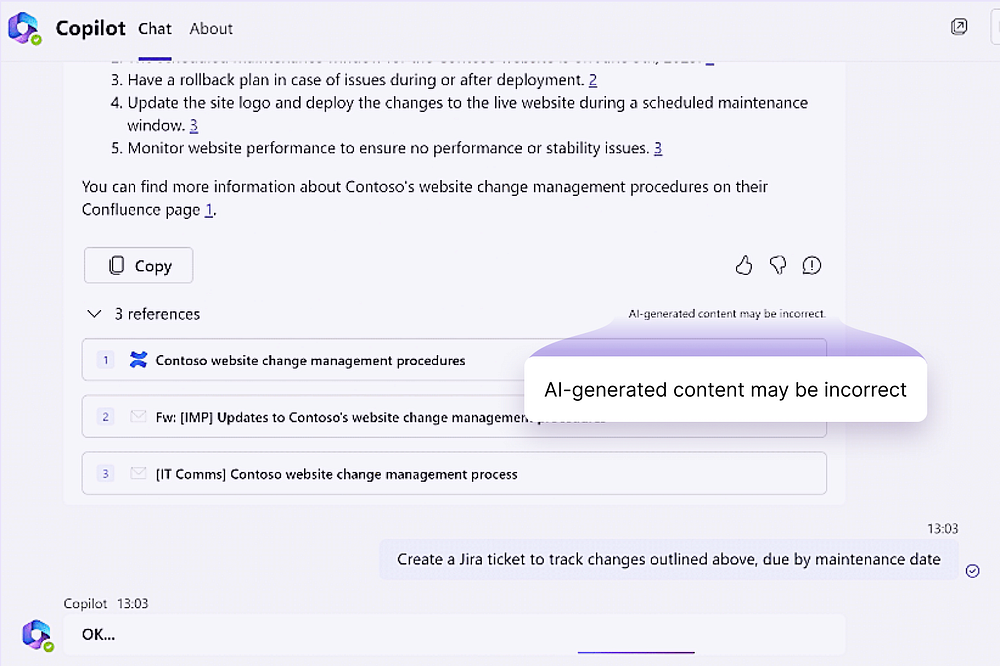

LLM explainability

Many common trust-calibration patterns, like confidence levels, don’t work well with LLMs. Explainability is not a strong point of LLMs either. Answers should be grounded in data, but there is still a chance that the data is misinterpreted or that important information is missing.

Make sure users can verify the answers with citations and sources.
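Citations only help if users can actually follow them. Below is a minimal sketch of rendering an LLM answer together with its sources, assuming the generation step already returns answer text containing `[n]` markers plus a list of retrieved documents. The `Source` type, the marker convention, and the example URL are hypothetical. When an answer cites nothing, the sketch says so instead of implying the answer was verified.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Source:
    title: str
    url: str


def render_with_citations(answer: str, sources: List[Source]) -> str:
    """Append the sources an answer cites so users can verify each claim."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    if not cited:
        # No grounding: tell the user rather than implying the answer is verified.
        return answer + "\n\n(No sources found for this answer. Please verify it independently.)"
    unknown = [n for n in sorted(cited) if not 1 <= n <= len(sources)]
    if unknown:
        raise ValueError(f"answer cites unknown sources: {unknown}")
    lines = [answer, "", "Sources:"]
    for n in sorted(cited):
        src = sources[n - 1]
        lines.append(f"[{n}] {src.title}: {src.url}")
    return "\n".join(lines)


sources = [Source("Refund policy", "https://example.com/refunds")]
print(render_with_citations("Refunds take up to 14 days [1].", sources))
```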

Absence of a comprehensive explanation

Sometimes, users won’t have access to the full decision process, such as with financial investment algorithms.

There may also be legal and ethical considerations around collecting data for AI and communicating which sources were used. Be careful when deciding what information can be shared.

In these cases, the best tactic is to be transparent about what can’t be shared.

Empowering users with control

Let users control the situation

In some situations, such as those related to safety or health, human control is crucial.

In such cases, Harvard researchers suggest designing for the process that leads to a decision and helping people make their own decisions, rather than focusing on handing users the AI’s end decisions. Even when they are explainable and interpretable, end suggestions add cognitive load in critical situations.

For example, in aviation, a team of researchers found that shifting the goal of decision-support tools toward understanding the situation, and away from the decision itself, may increase trust and reliance.

Another technique is to put a human expert in the middle. For example, instead of giving the predictions directly to the customers, let an expert in the company decide how to use AI predictions and what to tell the customer.
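Putting an expert in the middle can be as simple as routing every prediction into a review queue instead of straight to the customer. The sketch below is a hypothetical minimal version: `Prediction`, `ReviewQueue`, and the log fields are illustrative names, not part of any particular product. It also records whether the expert followed the AI, which later shows whether people over- or under-rely on it.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Prediction:
    case_id: str
    suggestion: str
    confidence: float


@dataclass
class ReviewQueue:
    """AI predictions wait here for an in-house expert; customers never see them directly."""
    pending: List[Prediction] = field(default_factory=list)
    log: List[dict] = field(default_factory=list)

    def submit(self, prediction: Prediction) -> None:
        self.pending.append(prediction)

    def review(self, case_id: str, message_to_customer: str, followed_ai: bool) -> str:
        """Record the expert's decision and return the message the customer receives."""
        prediction = next((p for p in self.pending if p.case_id == case_id), None)
        if prediction is None:
            raise KeyError(case_id)
        self.pending.remove(prediction)
        # Logging whether the expert followed the AI helps spot over- or under-reliance later.
        self.log.append({
            "case_id": case_id,
            "ai_suggestion": prediction.suggestion,
            "confidence": prediction.confidence,
            "followed_ai": followed_ai,
        })
        return message_to_customer


queue = ReviewQueue()
queue.submit(Prediction("c-42", "Offer the premium plan", 0.64))
print(queue.review("c-42", "Your current plan still fits your usage.", followed_ai=False))
```

The customer only ever sees the expert’s message; the AI suggestion and its confidence stay internal as decision support.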

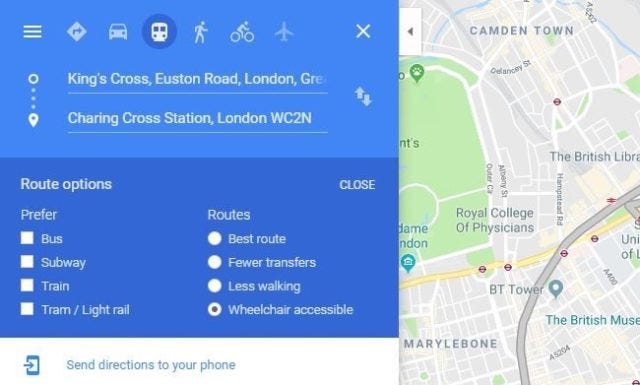

Let users control AI outputs

The interaction with AI doesn’t have to be a one-way street. Users can, and should, have the ability to steer the AI’s output to better suit their needs. Here are a few possible techniques:

- Changing preferences

With preference settings, users can tailor the AI’s responses to their likes and dislikes. Let users tweak and fine-tune what they want from the AI. It’s important for the AI to respond to those changes to maintain users’ trust.

- Giving feedback and rating AI suggestions

Giving feedback or rating the AI’s performance should let users adapt the system to their needs and preferences.

- Opting out

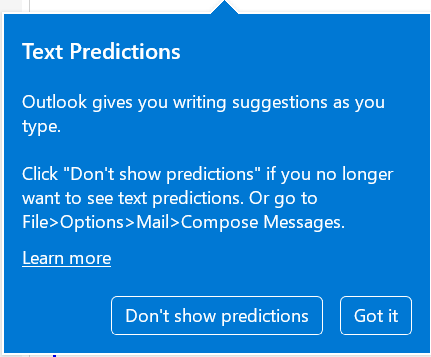

Making it possible to opt out of AI suggestions increases users’ freedom and control. Imagine a navigation app nudging a driver onto a faster route while they’re juggling passengers and traffic. It might be helpful, but it could also be dangerous.
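The three controls above can work together in one place. The sketch below is a hypothetical recommender filter: preference settings block topics, negative ratings remove items, positive ratings move items up, and an opt-out switch turns suggestions off entirely. Ranking by rating is a placeholder assumption for whatever the real model does; the point is that every change takes effect on the next call.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class UserControls:
    blocked_topics: Set[str] = field(default_factory=set)  # preference settings
    ratings: Dict[str, int] = field(default_factory=dict)  # item -> +1 / -1 feedback
    ai_suggestions_enabled: bool = True                     # opt-out switch


def suggest(candidates: Dict[str, str], controls: UserControls, limit: int = 3) -> List[str]:
    """Return suggested item ids, honoring the user's controls.

    candidates maps item id -> topic (a hypothetical data shape).
    """
    if not controls.ai_suggestions_enabled:
        return []  # opted out: show nothing rather than nudging the user
    allowed = [
        item for item, topic in candidates.items()
        if topic not in controls.blocked_topics and controls.ratings.get(item, 0) >= 0
    ]
    # Items the user rated up come first; the stable sort keeps the rest in order.
    allowed.sort(key=lambda item: -controls.ratings.get(item, 0))
    return allowed[:limit]


candidates = {"m1": "horror", "m2": "comedy", "m3": "drama", "m4": "comedy"}
controls = UserControls(blocked_topics={"horror"}, ratings={"m3": 1, "m4": -1})
print(suggest(candidates, controls))  # ['m3', 'm2']

controls.ai_suggestions_enabled = False  # the user opts out
print(suggest(candidates, controls))  # []
```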

Monitoring and gathering feedback

Regular check-ins on how our AI is performing, coupled with real conversations with users, are vital for successful implementation. Monitoring should include both user behavior metrics and explicit feedback.
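Combining the two signals can start small. The sketch below aggregates a hypothetical interaction log per reporting period into an acceptance rate (a behavior metric: did users act on the suggestion?) and an average rating (explicit feedback). The `Interaction` schema and the 1 to 5 rating scale are assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Interaction:
    week: str               # reporting period label, e.g. "W1"
    accepted: bool          # behavior metric: did the user act on the suggestion?
    rating: Optional[int]   # explicit feedback on a 1-5 scale, if the user gave any


def weekly_report(events: List[Interaction]) -> Dict[str, dict]:
    """Aggregate acceptance rate and average rating per reporting period."""
    grouped = defaultdict(list)
    for e in events:
        grouped[e.week].append(e)
    report = {}
    for week, items in sorted(grouped.items()):
        ratings = [e.rating for e in items if e.rating is not None]
        report[week] = {
            "acceptance_rate": sum(e.accepted for e in items) / len(items),
            "avg_rating": sum(ratings) / len(ratings) if ratings else None,
            "n": len(items),
        }
    return report


events = [
    Interaction("W1", True, 5), Interaction("W1", False, None),
    Interaction("W2", True, 2), Interaction("W2", True, None),
]
for week, stats in weekly_report(events).items():
    print(week, stats)
# W1 {'acceptance_rate': 0.5, 'avg_rating': 5.0, 'n': 2}
# W2 {'acceptance_rate': 1.0, 'avg_rating': 2.0, 'n': 2}
```

In this toy data, users accepted every suggestion in W2 while rating them poorly. A shift like that is a prompt to talk to users, not a verdict on its own.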

It’s not just about creating AI; it’s about evolving it, shaping it, and making sure it fits in where it’s needed. In the end, the aim is to keep improving, adapting, and ensuring that our AI-enabled tools are truly empowering the ones they’re designed for.

Sources:

- People + AI playbook, Google

- Guidelines for Human-AI Interaction, Microsoft

- UX: Designing for Copilot, Microsoft

- Design for AI, IBM

- Zana Buçinca, Alexandra Chouldechova, Jennifer Wortman Vaughan, and Krzysztof Z. Gajos. 2022. “Beyond End Predictions: Stop Putting Machine Learning First and Design Human-Centered AI for Decision Support.”

- Cara Storath, Zelun Tony Zhang, Yuanting Liu, and Heinrich Hussmann. “Building trust by supporting situation awareness: Exploring pilots’ design requirements for decision support tools.”

- Algorithms Were Supposed to Reduce Bias in Criminal Justice — Do They?, Boston University

- Julia Angwin, Jeff Larson, Surya Mattu, and Lauren Kirchner. 2016. “Machine Bias.” ProPublica