Making the machine believable: Wizard of Oz-ing AI applications

How can we convincingly simulate the experience of a complex digital product for a tech-literate population?

Introduction

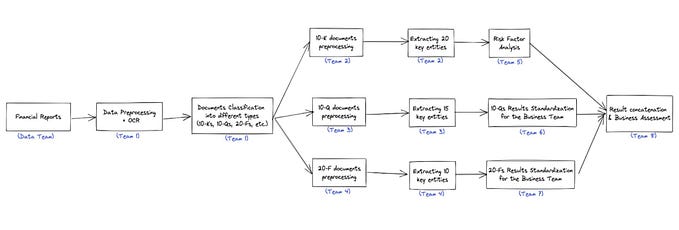

I recently had the privilege of leading design for an AI-based web application, which was developed at the University of Washington during an entrepreneurship course. Our product, Pitch.ai, is a public speaking assistant that uses AI to give users personalized and actionable feedback on their presentations and speeches. It lets a user record themselves practicing, analyzes the recording, and provides next steps for improving the presentation.

As the sole designer on a ten-person team of business and engineering students, I had the challenge of envisioning the product experience and supporting my design decisions with concrete evidence. In the end, the most powerful method I used was the Wizard of Oz (WOZ) prototype, which produced valuable design insights and helped me shape and sell a vision of the product to my team and to a cast of venture capitalists.

I learned a great deal about what can make the WOZ test great–and what to watch out for while crafting it.

The Wizard of What?

I was surprised to find that there really isn’t a good online resource for learning about this particular technique–it’s still mired in research papers and design research method books. And an online search for homemade WOZ tests reveals that students are misinterpreting the method–often confusing it with paper prototyping. Since there are no satisfying articles on the matter, I’d suggest furiously scrolling down to page 239 of Bill Buxton’s Sketching User Experiences, which you can read via PDF here.

The WOZ prototyping method aims to investigate how people interact with a system that doesn’t yet exist. It does so by faking the system: a participant should fully believe that they are controlling an interface that responds to them and affords a reasonable degree of freedom. The illusion of interactivity is conjured by a “wizard”, who controls the system’s back-end and responds directly to the participant’s input.

The method is especially helpful in scenarios where building the actual system is code-intensive. Gathering insights from real users before a programmer types the first lines of code tends to lead to a more human-centered solution, and it saves development time.

How I designed mine

Our team started by conducting a Kano survey and competitive analysis to generate a list of possible product features. This is also where I started: with little understanding of the space, a spreadsheet of potential features, and a short amount of time to figure out what to do with them.

I needed to put the product in front of users, right now. I felt the best way to do that would be to Wizard-of-Oz-it.

TL;DR: I hooked up an external monitor to my laptop, and used a wireless keyboard and mouse to manipulate an HTML doc running on a local server on the laptop. The participants interacted with the HTML doc, believing it to be a real, functioning product, when in fact I was updating code on my side and saving the document to send content over to them.

Here’s how I ran the session:

1. I introduced myself and the project to the testing participant. The project was described as an early AI prototype that would be giving them feedback on their public speaking skills. I then conducted a short interview to learn more about the participant’s experiences with public speaking.

2. I had the participant select from a number of different cue cards with topics that they could talk about for two minutes.

3. The participant started a two-minute countdown timer on the interface, and then gave a speech on whatever topic they had selected. While they gave the speech, I had time to write down custom feedback that explicitly related to what they were speaking about. I uncommented pieces of HTML and filled in my content, then waited for the timer to run out.

4. When the speech finished, the webpage automatically redirected to a loading page that said “calculating results”. This page remained until I commented out its lines of code and refreshed the page with my feedback (or the system’s feedback, as the participant saw it).

5. I had the participant talk through the feedback they received, analyzing their interactions and probing more when I heard something interesting.

6. I repeated the test two more times, each time giving feedback on a different part of their speech. The first test was voice feedback (like their word clarity, speed of speech, etc.), the second was body feedback (were they being expressive with their body language), and the third was on content (did they stray off topic, word choice, etc.). Each test was followed by a debrief on the feedback they received.
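For readers who want to try something similar, here is a minimal sketch of the screen logic behind steps 3 and 4: a countdown, then a “calculating results” page that stays up until the wizard publishes feedback. This is not the code from my prototype. The names `SPEECH_SECONDS`, `formatRemaining`, and `currentScreen` are hypothetical, and the sketch assumes the page can tell how many seconds have elapsed and whether feedback has been published.

```javascript
// Hypothetical sketch; all names here are assumptions, not the original prototype's code.
const SPEECH_SECONDS = 120; // the two-minute speech from step 3

// Format the remaining time as m:ss for the countdown display.
function formatRemaining(secondsLeft) {
  const clamped = Math.max(0, Math.ceil(secondsLeft));
  const minutes = Math.floor(clamped / 60);
  const seconds = String(clamped % 60).padStart(2, "0");
  return `${minutes}:${seconds}`;
}

// Decide which screen the participant should see.
// elapsedSeconds: seconds since the participant pressed start, or null if not started.
// feedbackReady: true once the wizard has published the feedback.
function currentScreen(elapsedSeconds, feedbackReady) {
  if (elapsedSeconds === null) return "start";
  if (elapsedSeconds < SPEECH_SECONDS) return "recording";
  // The timer has run out: hold on the loading page until the wizard is done.
  return feedbackReady ? "feedback" : "calculating";
}
```

Keeping the feedback hidden until the timer ends, even if the wizard finishes early, is what makes the loading page feel like the system is doing the work.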

Interface elements

A live HTTP server running a basic skeleton of the interface

On the laptop is a locally hosted webpage with live-reloading capabilities. Using a bit of HTML, CSS, and JS, I created a quick set of navigable pages that the participant could interact with. The most important piece of the interface is the feedback section–during the speech, I could comment and uncomment specific pieces of code, and type in my own feedback. When I was ready to send over the content to the user, I just had to hit ⌘+S to commit the changes and automatically reload the page.
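One way to approximate the comment-and-uncomment workflow is to keep the feedback in a small data structure and render only the sections that are switched on. The sketch below is an illustration under that assumption rather than my actual page. `renderFeedback`, `escapeHtml`, and the `show`, `title`, and `note` fields are hypothetical names.

```javascript
// Hypothetical sketch; the field and function names are assumptions.

// Escape typed text so that hurried feedback cannot break the page markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render only the sections the wizard has switched on and filled in.
// Setting `show` to true plays the role of uncommenting a block of HTML.
function renderFeedback(sections) {
  return sections
    .filter((section) => section.show && section.note.trim() !== "")
    .map(
      (section) =>
        `<section class="feedback"><h3>${escapeHtml(section.title)}</h3>` +
        `<p>${escapeHtml(section.note)}</p></section>`
    )
    .join("\n");
}
```

With live reload, saving the file that holds the sections would re-render the page, so the wizard only has to flip `show` and type a note while the participant is speaking.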

So why did I think this was better than other forms of “wizardry”?

Many WOZ test tools have a relatively low degree of feedback fidelity. Some researchers advance through a pre-determined slide deck, some set up Figma or InVision prototypes… but in cases where the product needs to give interpretive feedback on an unpredictable input, such as with an AI app, these tools fall short.

Additionally, I couldn’t use an existing platform and bend it to my own needs (another popular method), since my prototyping questions were so specific. Creating a quick prototype with HTML/CSS/JS afforded me a rapid environment to craft my own experience without the constraints that come with more standard WOZ tools.

Processing sketches

I used two readily-available Processing scripts on the right side of the screen as digital “props” in my WOZ experience. The upper script is a live video recording from the laptop camera, which tracks the participant’s face with a rectangle using the OpenCV (computer vision) library. I included this piece first to emulate the experience of recording oneself while giving a presentation, and second to make it feel like the computer was actively analyzing the participant as they moved around the space. The Processing script wasn’t actually hooked up to anything, or feeding data anywhere–it was just a piece of the experience that I believed would make the video analysis features more convincing.

Similarly, the bottom script is a simple oscilloscope that visualizes the waveform of the participant’s voice. This was intended to sell the idea that the program was also actively listening and analyzing the participant’s vocal qualities.
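I used a premade Processing script for this, but the core of an oscilloscope prop is small enough to sketch in JavaScript, the language of the rest of the prototype. The function below is an assumption-laden illustration, not the script I ran: `waveformPoints` is a hypothetical name, and it assumes audio samples arrive as numbers between -1 and 1.

```javascript
// Hypothetical sketch of an oscilloscope prop; not the Processing script from the test.

// Map audio samples in the range [-1, 1] to [x, y] points for a polyline
// that spans a drawing area of the given width and height.
function waveformPoints(samples, width, height) {
  if (samples.length === 0) return [];
  // Spread the samples evenly from the left edge to the right edge.
  const step = samples.length > 1 ? width / (samples.length - 1) : 0;
  const mid = height / 2;
  return Array.from(samples, (sample, i) => {
    const clamped = Math.max(-1, Math.min(1, sample));
    // Screen y grows downward, so positive samples are drawn above the midline.
    return [i * step, mid - clamped * mid];
  });
}
```

In a browser, the samples could come from a Web Audio `AnalyserNode` via `getFloatTimeDomainData`, with the returned points drawn on a canvas every animation frame. As with my Processing prop, nothing downstream needs to consume the data for the display to do its job.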

I didn’t need to include the Processing scripts. But the fact was that I only had to spend an extra 15 minutes finding these premade code snippets, and they helped construct the WOZ experience I was going for. If anything, a WOZ setup should be scrappy and rapid to assemble.

Takeaways from testing

The experience can be too good to be true.

One of the features I tested was a content analysis tool that could give feedback on the substance of what the participant was talking about. One participant remarked at the end of the session that she had very quickly realized the prototype was fake because “there was no way you have the technology to do that”.

Ironically, the engineers knew how to implement the feature in question, but the participant’s perception of technological limits meant that the illusion of the test was broken. The feedback wasn’t as valuable because the participant didn’t believe that they were interacting with a real system.

Show your hand.

After the first failure, I thought about how I might make the experience more convincing. Many Wizard of Oz sessions involve a wizard who is in the same room as the participant and is introduced as the notetaker. To make the experience more believable, I decided to take that a step further–I pulled up a note-taking app on my computer, and physically turned my screen towards the participant as I explained that I would be taking notes during the session.

It was a simple gesture, but it helped establish that extra bit of rapport with the participant. When they saw me tapping away at a keyboard, they assumed I was writing notes, not controlling the program that they were interacting with. The remainder of my participants all believed that the system was real.

Good storytelling can set your participant up to give valuable feedback.

In my case, we wanted the users to know that our product uses artificial intelligence. Participants were generally more willing to suspend disbelief when encountering a technology that they were unfamiliar with.

I realized that the entire experience of the test session should be carefully designed: how is the project introduced to the participant? How much and what kind of context do you give before starting the session? What details do you leave out?

A carefully crafted Wizard of Oz experience not only makes the system more believable, but also sets the mood for the participant to give thoughtful feedback and even engage in their own forms of storytelling. This is especially helpful for a tech-literate audience, who are likely to be more skeptical of the prototype and whose responses therefore tend to be speculative rather than reactive.

—

Disclaimer: any photographs with people in them are reproductions of actual user testing sessions, using people who consented to the use of their photo in a publication.