BEYOND THE BUILD

Seven Key Steps to Running Hypothesis-Driven Experiments Using the MVP

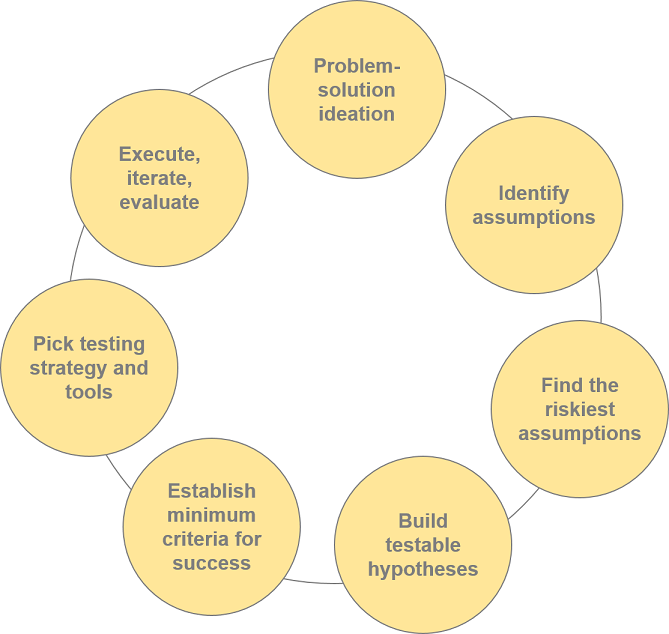

There are seven steps to running hypothesis-driven and validated learning experiments using an MVP:

1) Problem-Solution Ideation: Identify core customer needs and prioritize ideas by digging deep into pain points.

2) Identify Assumptions: Outline all assumptions made about the product or feature, including customer problems, matters of importance, substitutes, and payment willingness.

3) Find the Riskiest Assumptions: Prioritize assumptions based on their potential impact on the business and focus on the riskiest ones.

4) Build Testable Hypotheses: Convert assumptions into actionable hypotheses by defining target groups, potential problems, drivers, and expected outcomes.

5) Establish Minimum Criteria for Success: Define and quantify expected outcomes to drive MVP experiments toward business results.

6) Choose Testing Strategies and Tools: Select methods to control for bias in MVP testing environments, such as emails, fake buttons, 404 pages, explainer videos, fake landing pages, concierge service, piecemeal, or Wizard of Oz approaches.

7) Execute, Iterate, and Evaluate: Gather quantitative and qualitative data to create buy-in with stakeholders, and based on the results, decide to invest, scrap, or pivot the product or feature.

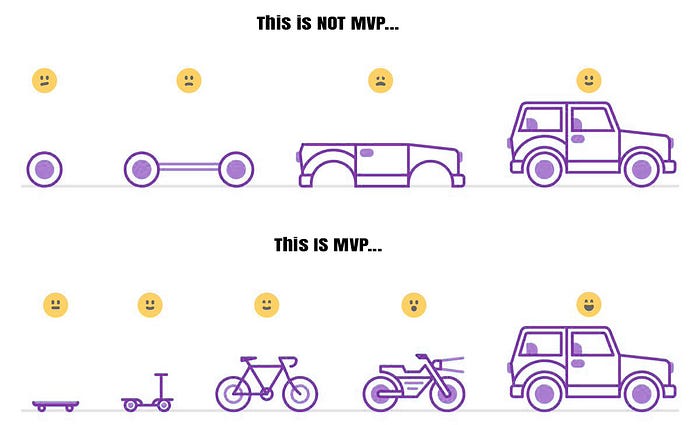

The term MVP, popularized by Eric Ries in his book The Lean Startup, is a widely accepted concept that product-centric startups and organizations follow religiously. According to the book, a minimum viable product (MVP) is the version of a new product that allows a team to collect the maximum amount of validated learning about customers with the least effort.

In other words, an MVP is the smallest version of a proposed product that could be pushed out to the market to gather real user feedback and gauge interest, so the team can take small, validated steps forward. MVPs are built to test assumptions and hypotheses related to the value propositions the product is pursuing.

The MVP is part of a scientific process for measuring and testing hypotheses under the principle of ‘validated learning’. Validated learning relies on a controlled testing environment or simulation in which participants are exposed to natural stimuli, so their responses are free of bias.

For example, when Zappos started to sell shoes online, they made a simple website, went to the closest shoe store, took photos, and uploaded them to their website. When orders came in, they would go to the shoe store, purchase the item, and ship it to the buyer. This cheap, controlled, and unbiased MVP experiment validated their idea of selling shoes online.

In short:

- An MVP is about idea validation

- The MVP helps mitigate the risk of investing capital upfront to build the full service you imagine as the answer to a customer pain

- The MVP lets you run many experiments in a short period, increasing the chance of finding product-market fit

The risks of experimenting with the MVP

The risks associated with developing MVPs are lost financial resources, time, and opportunity costs. Based on this, it is understandable why startups use MVPs — they lack resources, and due to high rates of failure, they are risk mitigators by nature. In established organizations where there is more tolerance for risk, the product manager exists in the traditional definition — building, launching, and validating new products or extensions of the current working products through MVPs.

The higher the risk tolerance of an organization, the more diverse the set of products it will be testing. For example, consider the range of products Google has tested during the past few years. A product manager at Google will be experimenting with several MVPs across a range of markets. As long as the search ads are making large amounts of money for Google, it will continue to experiment with a large number of MVPs in the market.

However, the downside of testing many products at a large organization could be potential damage to its brand. But if you think about it strategically, it isn’t always negative. For example, as a product manager at Google, would you have launched Google Glass? While today you might say, “No,” Google benefited greatly from the knowledge it acquired from this experimentation, and it got a vast amount of press and buzz marketing, as it tried to push the industry envelope, further enhancing Google’s brand. Elon Musk follows the same mindset with his experiments, only he guides the buzz marketing to his brand rather than to a specific company.

Seven steps in running MVP experiments

There are seven circular steps to running hypothesis-driven, validated learning experiments using an MVP:

- Problem-solution ideation

- Identify assumptions

- Find the riskiest assumptions

- Build testable hypotheses

- Establish minimum criteria for success

- Pick testing strategy and tools

- Execute, iterate, evaluate

1. Problem-solution ideation — uncovering core customer needs

New product and feature ideas and requests usually come from four places:

- Employees: from your colleagues, boss, and yourself.

- Metrics: the problems and inefficiencies you find when you’re looking into how users use your product. For example, data on how users navigate through your app might show that many people spend one second in a particular area and immediately go somewhere else. This suggests that section of the app is unclear and you need to redesign it, or at least figure out what’s going on and try to fix it.

- Users: This is user feedback from forums or emails to your company and social media. It’s everyone out there who uses your product.

- Clients: This is a special case that applies to SaaS products.

Let’s say you have an ample number of ideas, and now it’s time to prioritize them by digging deep into the core pain points for which people are asking for a solution. In the world of product management, people are constantly asking for features, and at first glance, they seem reasonable, but they are only symptoms of the main problems users are having behind the scenes.

As a product manager, you need to find a solution to a problem, rather than fitting a problem to a solution your team has already thought of. When making a go/no-go decision on a feature or product, ask these questions:

- Is this solving a problem? Use the “5-whys” model to get to the bottom of why a feature is needed. Most of the time, the underlying need can be met by optimizing current features or changing their UX.

- Will the new features have any unintended side effects?

2. Identify the full range of assumptions

At this stage, you have an idea of what you are trying to build and you know your target customers and the solution you are offering. With any new business idea or new features, the product team will be making a lot of assumptions, and even those assumptions are made up of smaller assumptions. For example, when you are getting out of your house in the morning to drive to work, you are assuming the engine is working fine, you have gas, and the cold has not frozen the water in the cooling system. However, there is no guarantee those assumptions are true, and when it comes to testing an MVP, we have to outline assumptions to the extent possible.

When you are testing a new idea, it is based on observation, intuition, and a hunch, and therefore, many assumptions are made. To identify these assumptions, try to think of it as a scientific experiment, whereby nothing is valid until proven so in a controlled and unbiased experimental environment. Anything you think you know is just an assumption, so you have to question everything you are doing and try to validate it way before starting to build something.

In general, product managers deal with four categories of assumptions:

- The customer has XYZ problems. For example, if you are building Bluetooth headphones (e.g., Apple AirPods), you are assuming people are fed up with cords.

- Something matters to the customer. Any new feature means you are assuming your customers care about that offering. So if you are going to offer something cheaper, you are assuming people care about it becoming cheaper. If you are building a product with better convenience, you’re assuming convenience is a big factor in the service.

- There are no other satisfactory substitutes. Most often, product managers assume their service has no substitute, or their quality is so superior that people would be willing to take it on or switch service providers.

- Someone will eventually pay for the service. Users will pay for it or sign up to eventually pay money later. The assumption here is the pain is big enough for people to be willing to pay for the solution.

Let’s walk through the potential assumptions Uber had to work with when it was launching in San Francisco in 2009. Its problem-solution statements were as follows:

- Problem: It’s very difficult to find a cab in downtown San Francisco; it takes more than 30 minutes at peak times

- Solution: Find a cab closest to you in less than a couple of minutes from your smartphone

In the statements above, there are plenty of assumptions being made, such as:

- There is a lack of supply of cabs

- In off-peak times, it takes less than 30 minutes

- People are unhappy with the current situation

- The average times of finding a cab and the arrival of a cab are not known

- Nothing is said about areas other than downtown San Francisco

- Nothing is said about cities other than San Francisco

- You need a smartphone for the solution to work

- Enough drivers have smartphones for operations to scale

- People will trust getting in a taxi via their smartphones

- Service will be on-demand to find a taxi in a couple of minutes

3. Find the riskiest assumptions

After listing assumptions, the product manager needs to prioritize assumptions and focus on the ones that would hinder the business most if they turned out to be false. To prioritize assumptions, use the table below:
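One way to make this prioritization concrete is to score each assumption. The sketch below is only an illustration, not the table this article refers to: the 1-to-5 `impact` and `uncertainty` scales, the scores given to three of the Uber assumptions, and the `impact * uncertainty` rule are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    statement: str
    impact: int       # hypothetical scale: 1 = minor, 5 = business fails if false
    uncertainty: int  # hypothetical scale: 1 = well evidenced, 5 = pure guess

    @property
    def risk(self) -> int:
        # Illustrative rule: the riskiest assumptions are both
        # high-impact and poorly evidenced.
        return self.impact * self.uncertainty


def rank_by_risk(assumptions):
    """Return the assumptions ordered from riskiest to least risky."""
    return sorted(assumptions, key=lambda a: a.risk, reverse=True)


# Scores below are made up for illustration, not real Uber data.
assumptions = [
    Assumption("There is a lack of supply of cabs", impact=4, uncertainty=2),
    Assumption("People will trust getting in a taxi via their smartphones",
               impact=5, uncertainty=4),
    Assumption("Enough drivers have smartphones for operations to scale",
               impact=4, uncertainty=3),
]

ranked = rank_by_risk(assumptions)
for a in ranked:
    print(f"{a.risk:>2}  {a.statement}")
```

The assumptions at the top of the ranking are the ones to turn into hypotheses and test first.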

4. Build testable hypotheses for your assumptions

Assumptions are a rough list of things product managers think need to be true for the product to succeed. They are neither precise nor actionable. To move from theories to action, we need to build hypotheses. A hypothesis is a single written statement that must hold for an assumption to be true. To build hypotheses for an assumption, answer the following questions:

Example assumption: People are unhappy with the current situation.

- Target group: Who, exactly, will be unhappy?

- Potential problem: How unhappy are they?

- Action/drivers: Why are they unhappy?

- Expected outcome: What will change when the solution is offered?

You can place your hypotheses into the following hypothesis statement builders:

- For a startup, where founders are launching a fresh product: We believe [subject/target audience] will [predicted action] because [underlying reason/driver].

- For an established product, where you want to test new features or user interests: If we [action], we believe [subject/target audience] will [predicted action] because [underlying reason/driver].

- For managing complete and complex products, such as an e-commerce platform with 5+ years of market presence: We believe [subject/target audience] has a [problem/pain] because of [underlying reason/driver]. If we [action], the [metric] will improve.
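The statement builders above are fill-in-the-blank templates, so they can be sketched as format strings. This is a minimal illustration: the dictionary keys, the `build_hypothesis` helper, and the example wording about San Francisco commuters are hypothetical and chosen only for the example.

```python
# The three statement builders, with the bracketed slots as named fields.
# The keys ("startup", "established", "complex") are hypothetical labels.
TEMPLATES = {
    "startup": (
        "We believe {audience} will {predicted_action} because {driver}."
    ),
    "established": (
        "If we {action}, we believe {audience} will {predicted_action} "
        "because {driver}."
    ),
    "complex": (
        "We believe {audience} has a {problem} because of {driver}. "
        "If we {action}, the {metric} will improve."
    ),
}


def build_hypothesis(kind: str, **parts: str) -> str:
    """Fill one template; raises KeyError if the kind or a slot is missing."""
    return TEMPLATES[kind].format(**parts)


hypothesis = build_hypothesis(
    "startup",
    audience="commuters in downtown San Francisco",
    predicted_action="request a ride from their smartphone",
    driver="finding a cab takes more than 30 minutes at peak times",
)
print(hypothesis)
```

Forcing every slot to be filled is the useful part: a hypothesis with no stated audience or driver fails loudly instead of staying vague.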

5. Establish minimum criteria for success

MVP experimentation outcomes fall into one of the following categories:

- Proved false and not worth pursuing

- Fully true, sticky with customers, and needs to be pursued

- Falls somewhere in between

90% of the time, MVPs end up in the third category, somewhere in between, which is quite a vague situation. To bring clarity to the effort, we need to define and quantify the outcomes we expect to see, so that MVP experiments drive toward business results.

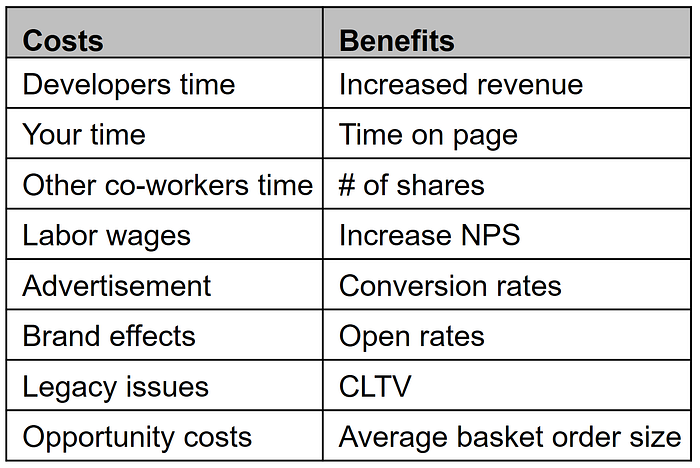

To conclude, we’ll need to perform a cost-benefit analysis. Wherever the benefits exceed the costs, that hypothetical MVP could be invested in, to become a fully functional product. Here are some things you could consider for your cost-benefit analysis:

When you already have a working product with users, you will be less sensitive to many risks and know your metrics easily. However, in a startup setting, engagement levels are low, people are rarely signing up for the service, and founders are figuring out whether the product should exist.

In a startup, try to look for signals that answer the following:

- Is it a good idea overall?

- Does it meet user needs?

- Does the problem exist?

- Are users using the solution?

This could translate into metrics, such as:

- % of people who sign up

- % of people who share posts

- Average purchase price

- Number of users who open your emails

Tip 1: Focus on one metric at a time.

Tip 2: Focus on current customers, and assess the performance of relevant metrics.
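A minimum criterion for success is easiest to hold yourself to when it is written down as a number before the experiment starts. The sketch below follows Tip 1 and checks a single metric, the sign-up rate. The 10% threshold and the visitor counts are invented for illustration, and the function names are hypothetical.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who took the target action (for example, signed up)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def meets_minimum(observed: float, minimum: float) -> bool:
    """True when the observed metric reaches the pre-agreed minimum."""
    return observed >= minimum


# Hypothetical numbers: the minimum is fixed BEFORE running the experiment.
MIN_SIGNUP_RATE = 0.10
rate = conversion_rate(conversions=36, visitors=300)
passed = meets_minimum(rate, MIN_SIGNUP_RATE)

print(f"sign-up rate: {rate:.1%}, meets minimum: {passed}")
```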

6. Choose testing strategies and tools

In MVP testing environments, you will need to control for bias, so users do not know they are being tested. There are several methods for this.

- Emails: to test demand for a product, you can send emails to customers. All you need is an email client, a user base, and basic communication skills. In a startup setting, the founders need to round up target-customer email addresses first. Email testing is quick, cheap, and easy to implement, and it allows segmented targeting of users. On the downside, it might dent the company brand and come off as sloppy. Be mindful of audience expectations (for example, luxury versus quick-fix solutions), match the quality of the email templates your marketing department already sends, and try to pair your emails with a landing page or concierge service.

- The fake button: “shadow”, “fake”, or “coming soon” buttons are made to observe people’s behaviors and reactions. They are often used in mid-size startups to test the stickiness of new features against each other. Shadow buttons are very subtle, and they are generally used for new products with a small number of users. They are easy to implement and gather large amounts of data, but they carry brand risks for larger organizations. The product manager will need to think about users’ tolerance for this method. Try to link the button to a landing page, or to a fake call to action, to reduce user churn.

- 404, coming soon, and pre-order pages: The 404 page says the requested page could not be found, or links back to an old, expired page. The coming soon page gathers customers’ contact details for later follow-up. Amazon uses this regularly: because it has a diverse range of offerings and billions of pages, customers find a missing page understandable. Pre-order pages work the same way as coming soon pages. You have probably seen them before without realizing they were a test. Coming soon pages are everywhere these days and are nothing out of the ordinary, so they carry little risk.

- Explainer videos: videos that show what the product does. The format can be either a tutorial or a sales pitch. In the tutorial style, built from screencasts, the product does not exist yet and the screens are animations. This is how Dropbox started: a fake video was published on YouTube, and the response was off the charts. In the sales pitch style, the product is pitched and explained, but the actual product is usually not available, so the video only tests demand. Explainer videos work because genuine products have videos that explain them anyway. Videos convert better in sales, describe the product in more depth, and excite users, but they can be time- and resource-intensive to produce.

- Fake landing page — a single page that pitches all the features of a product followed by a call to action. This is the same as an e-commerce website’s page that aims to gauge demand by announcing, “iPhone X coming soon, pre-order.”

- Concierge service: this method provides a 1-on-1 service, where someone walks the customer through a task. There is a beta version of the product, and the product manager manually monitors user behavior and responses to it. This helps the product manager understand the customer’s reasoning, but it consumes a lot of resources per customer. It is best run in parallel with other experimentation methods, and it suits early-stage startups and luxury items with sizable gross margins.

- Piecemeal — in this testing system, the finished product is broken down into sub-systems, such as subscriptions, email service, etc. Sub-systems are outsourced to specialized third-party providers. A fully functional-looking product is launched and tested for feedback. This is how Groupon launched its initial services, outsourcing its massive e-mail campaigns. A major challenge with this method is getting various third-party software to sync and work seamlessly with each other.

- The Wizard of Oz: here the front end is fully built and looks highly polished, but everything on the back end is rudimentary and done by hand. This is how Zappos started. The method is very resource-intensive, but it protects the company brand.
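Whichever method you pick, the experiment still has to be instrumented so it produces a number you can compare against your minimum criterion. As one example, here is a minimal sketch of the bookkeeping behind a fake button test. The `FakeDoorTest` class, its method names, the feature name, and the counts are all hypothetical; a real product would send these events to its analytics tool instead of keeping them in memory.

```python
from collections import Counter


class FakeDoorTest:
    """Counts how often a not-yet-built feature's button is shown and clicked."""

    VALID_EVENTS = ("impression", "click")

    def __init__(self, feature: str):
        self.feature = feature
        self.events = Counter()

    def record(self, event: str) -> None:
        if event not in self.VALID_EVENTS:
            raise ValueError(f"unknown event: {event!r}")
        self.events[event] += 1

    def click_through_rate(self) -> float:
        """Clicks divided by impressions; 0.0 before the button is ever shown."""
        shown = self.events["impression"]
        return self.events["click"] / shown if shown else 0.0


# Simulated traffic with made-up numbers: 200 users saw the button, 30 clicked.
test = FakeDoorTest("export-to-pdf")
for _ in range(200):
    test.record("impression")
for _ in range(30):
    test.record("click")

print(f"{test.feature}: {test.click_through_rate():.1%} clicked")
```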

7. Execute, iterate, evaluate

The aim of running an MVP experiment is to gather quantitative (what customers did) and qualitative (why they did what they did) data to create buy-in with stakeholders, whether they are internal decision-makers or external investors. The MVP is the tool that will help you discover customers. Results of MVP experimentation fall into three categories of decision:

- Do you invest in the product, and give it the green light?

- Do you scrap the whole idea?

- Do you pivot, shift, or change bits of the hypothesis, and push on?

And that is when the loop of “validated learning and experimentation” repeats itself over and over again.
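The three decisions can be tied back to the minimum criteria for success from step 5. The sketch below is a simplification built on assumptions: it looks at one quantitative metric only, and the `scrap_below` floor and the example thresholds are hypothetical. The qualitative data (why customers did what they did) still has to inform the final call.

```python
def decide(observed: float, minimum: float, scrap_below: float) -> str:
    """Map one observed metric to 'invest', 'pivot', or 'scrap'.

    minimum     -- the pre-agreed minimum criterion for success
    scrap_below -- hypothetical floor under which the idea is dropped
    """
    if scrap_below > minimum:
        raise ValueError("scrap_below must not exceed minimum")
    if observed >= minimum:
        return "invest"
    if observed < scrap_below:
        return "scrap"
    # Somewhere in between: adjust the hypothesis and run another experiment.
    return "pivot"


# Made-up sign-up rates checked against a 10% minimum and a 2% floor.
results = {rate: decide(rate, minimum=0.10, scrap_below=0.02)
           for rate in (0.12, 0.05, 0.01)}
print(results)
```

A "pivot" result feeds straight back into step 2: revise the assumption, rebuild the hypothesis, and test again.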

A minimum viable product (MVP) is the version of a new product that allows a team to collect the maximum amount of validated learning about customers with the least effort. There are seven circular steps to running hypothesis-driven, validated learning experiments using an MVP:

- Problem-solution ideation

- Identify assumptions

- Find the riskiest assumptions

- Build testable hypotheses

- Establish minimum criteria for success

- Pick testing strategy and tools

- Execute, iterate, evaluate