How can behavioural sciences help build a better chatbot experience?

Exploring how identifying different modes of user thought can help create better conversational experiences. Thinking fast and slow.

In Daniel Kahneman’s book ‘Thinking, Fast and Slow’, the professor introduces the idea that our behaviour is determined by two modes of thought in our brain: System 1 and System 2.

System 1 is where our minds engage in activities that are automatic and impulsive. These are activities we are not very conscious of; they happen subconsciously. They may seek attention if something goes awry, but largely they remain under the radar. This system takes on tasks very quickly and uses existing patterns in our memory to handle them.

For instance, think about how you tie your shoelaces now. More often than not, you do it subconsciously. You just put on your shoes and walk out the door. Don’t you? Do you stop to think about your shoelaces? I bet you haven’t thought about your shoelaces in a long while. Yet it is still you who does it every single time. This activity happens under the radar because you have learned how to do it and stored that algorithm in your head. Since it is the same sequence of steps every time, you don’t really need to be conscious of it. Agree?

System 2 is the opposite. It is the system that involves conscious logical thinking and decision making. It engages slowly, deliberately and carefully. It takes care of activities that require focus and the most mental effort, such as finding a person in a crowd, solving a difficult math problem or navigating an unfamiliar place.

Go back to when you were a child. Imagine you are tying your shoelaces for the very first time. You have seen your dad do it many times. He has even taught you how to do it. So you want to give it a try. Now every step you take is conscious. You may get confused and frustrated, and still you may make a conscious decision not to give up. And when you finally succeed, you feel a sense of achievement. This feeling reinforces the sequence of steps you needed to reach the goal state: tied shoes. System 2 is conscious. It helps us learn.

What has this got to do with chatbots?

Your chatbot, just like your shoes, provides users with an experience.

Users rely on System 1 and System 2 when interacting with chatbots, just as they do when tying their laces. How?

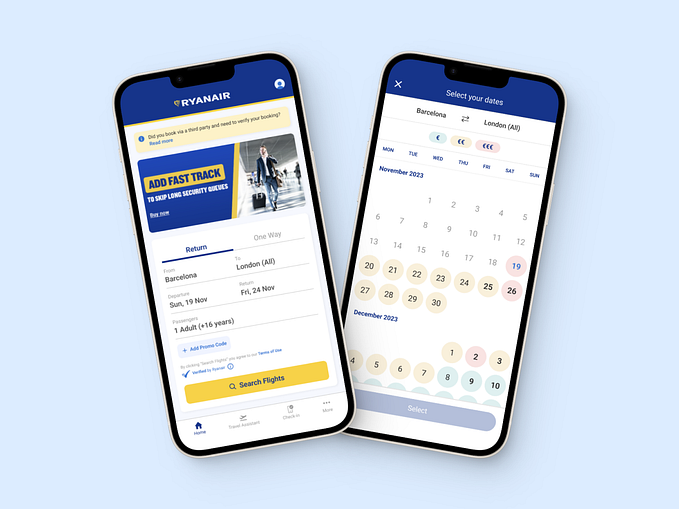

When you interact with a chatbot for the very first time, there are a lot of unknowns. How does it work? What can I ask? Is it really intelligent? Will it take over jobs? And so on. System 2 kicks in. Your first few interactions are conscious and careful.

Then, as you get comfortable and learn what it can and can’t do, System 1 kicks in. You know the best way to ask for things. You are pretty confident. You know the sequence of steps to reach your goals. The algorithm has been discovered, validated and optimised. Now System 1 can do it on autopilot.

I am not saying that all interactions after the initial period will be handled by System 1, but most mundane ones will be. If the user wants to ask something important, that would probably be a System 2 conversation. But if he buys a ticket for his everyday commute, it is probably done via System 1, because the task is frequent and of low monetary value.

Users may engage chatbots using both systems, System 1 and System 2, but at different points in time.

So how do we design our chatbots to deal with them?

Let us explore.

System 1 is the automatic, instinctive system. When the user is relying on this system, he or she may be engaged in some other primary activity: driving a car, waiting for a train to arrive, cooking, speaking to a friend and so on. The user may be asking the chatbot a simple question and expecting a simple, straightforward answer. Therefore the chatbot needs to keep the conversation simple and under the radar. Remember the saying, ‘Good design is invisible.’

When System 1 is at play, the objective of the chatbot must be to keep the conversation under the radar.

The conversation flow needs to follow a pattern the user is already familiar with. Any new element will call on System 2, and that is not going to go down well with the user. The user will be frustrated if the chatbot asks a question or makes a remark that is hard to understand or makes him think too hard, because it distracts him from his primary task.

System 2 is slow, effortful and conscious. When the user’s System 2 engages a chatbot in conversation, the conversation is the user’s primary task. You have the user’s full attention, so you need to bring your A-game. The aim of the chatbot here should be to make the best use of that attention and delight the user. For conversational tasks the user performs frequently, the chatbot could add some humour. It can introduce new features the user might like. It can also elicit feedback on those new features as well as on its own performance.

When System 2 is at play, the aim of the chatbot must be to delight the user.

It is also a time when the chatbot cannot afford to do anything stupid, as the user is consciously watching. However, mistakes may be forgiven if the chatbot recovers quickly and skillfully. Another potential issue is that the user might notice flaws in the chatbot’s personality. Make sure the chatbot displays consistent character traits within and across conversations. It can’t be very verbose at one time and succinct at another. It can’t be extroverted sometimes and introverted at other times. Discrepancies in personality traits could get noticed when System 2 is in play.
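To make the contrast between the two modes a little more concrete, here is a minimal Python sketch of how a response layer might treat them differently. It assumes the chatbot already knows which mode is active, which is the hard part. `Mode`, `compose_reply` and its parameters are hypothetical names for illustration, not part of any chatbot framework.

```python
from enum import Enum


class Mode(Enum):
    SYSTEM_1 = "fast"  # automatic: the user is busy with another primary task
    SYSTEM_2 = "slow"  # deliberate: the chatbot has the user's full attention


def compose_reply(answer: str, mode: Mode, joke: str = "",
                  new_feature: str = "") -> str:
    """Wrap a core answer according to the (assumed known) thinking mode."""
    if mode is Mode.SYSTEM_1:
        # Stay under the radar: just the answer, in the format the user knows.
        return answer
    # System 2: use the user's attention to delight, inform and ask for feedback.
    parts = [answer]
    if joke:
        parts.append(joke)
    if new_feature:
        parts.append(f"Tip: you can now {new_feature}.")
    parts.append("How did I do today?")
    return " ".join(parts)


print(compose_reply("Your ticket is booked.", Mode.SYSTEM_1))
print(compose_reply("Your ticket is booked.", Mode.SYSTEM_2,
                    new_feature="save favourite routes"))
```

The first call prints only "Your ticket is booked."; the second appends a feature tip and a feedback question. Keeping the chatbot's wording and tone identical in both branches, and varying only how much is added around the answer, is one way to respect the point about a consistent personality.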

The next obvious question

And the next obvious question, then, is: how will the chatbot know which system it is talking to? I must admit I don’t have a definitive answer, although I have some ideas. One heuristic that can be applied is usage. Is the user relatively new? If so, she could be using System 2. If not, she could predominantly be using System 1 for her most frequent tasks. With such users, the chatbot could use probes. Try some humour and see if there is a response. Do something clever and see if the user notices. Notice if her behaviour differs from usual. These signals might help determine which of the two systems is engaging the chatbot.
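The usage heuristic and the probes described above could be combined into a simple rule-based guess. The Python sketch below is one possible reading, assuming the chatbot keeps some per-user history. `UserStats`, `guess_mode`, the use of message length as the "different from usual" signal, and both thresholds are hypothetical choices for illustration, not validated values.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class UserStats:
    sessions: int                 # conversations the user has had so far
    avg_message_len: float        # user's usual message length, in characters
    task_counts: dict[str, int] = field(default_factory=dict)  # uses per task


def guess_mode(stats: UserStats, task: str, message: str,
               responded_to_probe: bool = False,
               new_user_sessions: int = 5, frequent_task: int = 10) -> str:
    """Guess "system_1" or "system_2"; thresholds are arbitrary placeholders."""
    # Relatively new user: the chatbot is still full of unknowns.
    if stats.sessions < new_user_sessions:
        return "system_2"
    # A probe (some humour, something clever) was noticed: the user is attentive.
    if responded_to_probe:
        return "system_2"
    # Behaviour unlike the user's usual self (assumed signal: much longer messages).
    if stats.avg_message_len > 0 and len(message) > 2 * stats.avg_message_len:
        return "system_2"
    # A frequent, familiar task for an experienced user: likely on autopilot.
    if stats.task_counts.get(task, 0) >= frequent_task:
        return "system_1"
    # Less familiar task: assume the deliberate mode.
    return "system_2"


regular = UserStats(sessions=40, avg_message_len=30.0,
                    task_counts={"buy_commute_ticket": 120})
print(guess_mode(regular, "buy_commute_ticket", "ticket to work please"))
print(guess_mode(regular, "dispute_charge", "I was charged twice"))
```

For the regular commuter, the first call prints `system_1` and the second prints `system_2`, because disputing a charge is not one of her frequent tasks. Any such rule is a guess at best; treating its output as a starting assumption that the conversation keeps revising is probably safer than a hard switch.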

I believe there is a lot we can do to create great conversational experiences if we further understand how conversations work when the two systems are at play and how to identify which one is currently engaging the chatbot.

I am eager to hear your thoughts. Please comment.