
What we can learn from Alexa’s mistakes

Design challenges for conversational UIs

Conversation. The key to human-to-human interaction: a spectrum ranging from cavemen around a campfire, through lengthy political debates, to awkward small talk with your dentist. It's something we've really got sussed. Based on conversation alone, we quickly decide whether someone is interesting, whether they're paying attention to us, and whether we want to date them or employ them. If we want to get something done, we talk about it; it's how we transmit information and transact with one another.

So, it just makes sense that we would use conversation to interact with services and products - right?

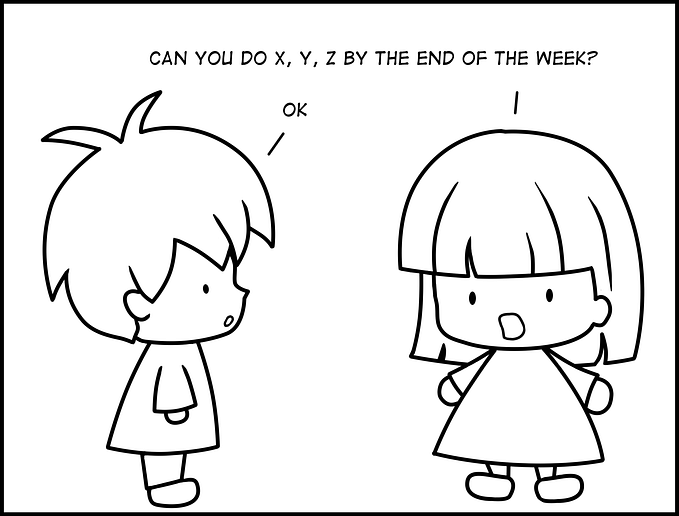

Right. But it turns out there are some challenges in creating an artificial conversation partner, especially one that can access your finances or send a message to your boss. These challenges have long been resolved in human-to-human conversation, but they have yet to be solved to the same extent in conversational interfaces.

Conversation is the interface we all know, so it is unreasonable for an interface designer to expect users to relearn it in order to interact with a service. The onus is on the service to understand however the user naturally chooses to converse with it.

Here are some interface challenges, highlighted by Amazon Alexa's recent boom in popularity:

Authentication

⚡ "Who's speaking?"

During a story on a local news show, the anchor quoted a girl who had accidentally ordered a dollhouse through a conversation with Alexa. Hearing this as a command, Alexa devices in many viewers' homes responded by trying to order a dollhouse as well:

“I love the little girl saying ‘Alexa ordered me a dollhouse’”

Authentication is essential in transactions and interactions with services: we expect reasonable safeguards, especially when paying or logging in. When dealing with our money and personal information, we need to be careful to…