Whose delight? Avoiding bias in UX research and design

Credit: Airbnb, https://www.wired.com/story/jennifer-hom-illustrations-airbnb/

Through technology and design, we have the opportunity to be shapers, recipients and advocates of products, brands and services. Screens can now be touched, inviting cross-sensory experiences. House keys may become obsolete. Chatbots adapt to our voices and accents. Thousands of algorithms learn our preferences and guide us through our digital and analog lives. Apps and devices promise to transform our bodies, minds and souls by making us happier, fitter and more productive. When it comes to technological affordances, there seem to be no limits to what we can imagine.

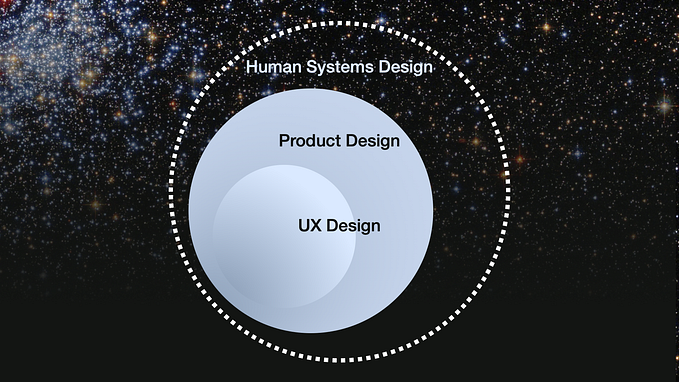

And yet, as researchers and designers, we are prone to both cognitive bias (confirmation bias, the framing effect, the sunk cost fallacy) and cultural bias (a failure to consider the power imbalances in our society). Whether we work in-house or with clients, we adopt the culture of our own company or of the company to which we sell our services. The visuals in research deliverables are often stock imagery catering to a rather monolithic demographic: white, urban and middle-class. This narrowness extends from bodies to objects. More often than not, our decks include an Eames chair, pour-over coffee, and a really big desk with nothing on it. Invariably, we seem to design for tech-savvy, able-bodied, mobile users at the height of their intellectual capacities. Even though tech companies herald terms like inclusive design, compliance with the Web Content Accessibility Guidelines (WCAG), which make content accessible to a wider range of people with disabilities, including blindness and low vision, deafness and hearing loss, learning disabilities, cognitive limitations, limited movement, and speech disabilities, is not always our first priority as UX designers.

How can we un-bias our own practice? As we strive to create technology that is as inclusive as possible, it is crucial to consider every level of empowerment and vulnerability. We wanted to start small, from our respective positions as a UX Researcher and an Experience Designer. Talking to colleagues, we gathered practical examples that helped the two of us map out the process of un-biasing. We list them here:

Self-awareness: Position yourself in your research and design. Consider to what extent your gender, age, race, ethnicity, education, sexuality, geographical location and socioeconomic status shape your standpoint. Think hard about the resources you have and often take for granted: cutting-edge technology, money, transportation, a network, a degree, an able body. Now think about your users. Did you correct any assumptions you were making?

Exchanges with clients: We cannot always choose our clients. Where possible, aim for diverse representation among client stakeholders and diverse decision-makers around the table at alignment workshops, kick-offs, and recurring check-ins. Say clearly that you want to hear voices other than the COO's.

Recruitment: Recruit diverse participants for stakeholder interviews, contextual inquiries (CIs), user interviews and validation testing (think in terms of gender, ethnicity, age, tenure, ability and socioeconomic status). There is no user experience without a body: if we want to imagine diverse kinds of experiences, we must consider diverse kinds of bodies.

Developing personas: Design for the person with the most needs first, and others will benefit. When running a persona workshop, remind the client of their potential assumptions and blind spots, and encourage them to move beyond them. Keep the texts and imagery you use gender- and race-neutral; for example, use drawings that do not reveal too much demographic information. Don't use demographics to back up your claims. At the same time, don't whitewash your personas: leave them their worries, their constraints, the things that weigh them down, and the needs that may differ from other users'.

Journey maps / service blueprints / workflows / user scenarios: Don't forget what can go wrong. A user's journey is rarely a linear path to success. Talk about unhappy paths, glitches, drawbacks and obstacles, which are often driven by culture and socioeconomic status.

UX/UI Design: Create affordances by actively identifying possible points of exclusion (e.g., color blindness or deafness) and offering solutions (e.g., closed captioning or transcription features for users who are deaf or hard of hearing). Make sure texts and commands are comprehensible and easy to follow, and provide access to support. Avoid technical jargon.
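One point of exclusion that can be checked mechanically is text contrast, for which WCAG sets minimum ratios. The sketch below computes the WCAG 2.x contrast ratio between two colors and applies the level AA thresholds (4.5:1 for normal text, 3:1 for large text). It is a minimal illustration that assumes colors arrive as 6-digit hex strings. It is not a full accessibility audit, and contrast alone does not address every form of color blindness (for that, avoid conveying meaning through color alone).

```python
# A minimal sketch of the WCAG 2.x contrast-ratio check.
# Assumption: colors are given as 6-digit hex strings such as "#777777".


def _channel_to_linear(value: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x formula."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of an sRGB color, from 0.0 (black) to 1.0 (white)."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _channel_to_linear(r)
            + 0.7152 * _channel_to_linear(g)
            + 0.0722 * _channel_to_linear(b))


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color."""
    l1, l2 = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (l1 + 0.05) / (l2 + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """WCAG level AA: at least 4.5:1 for normal text, 3:1 for large text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


if __name__ == "__main__":
    # Compare two grays against a white background.
    for fg in ("#777777", "#595959"):
        ratio = contrast_ratio(fg, "#ffffff")
        print(f"{fg} on white: {ratio:.2f}:1, passes AA: {passes_aa(fg, '#ffffff')}")
```

A mid-gray such as #777777 on white looks readable to many sighted designers on a high-end monitor, yet it falls just short of 4.5:1. That is exactly the kind of assumption worth checking rather than trusting.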

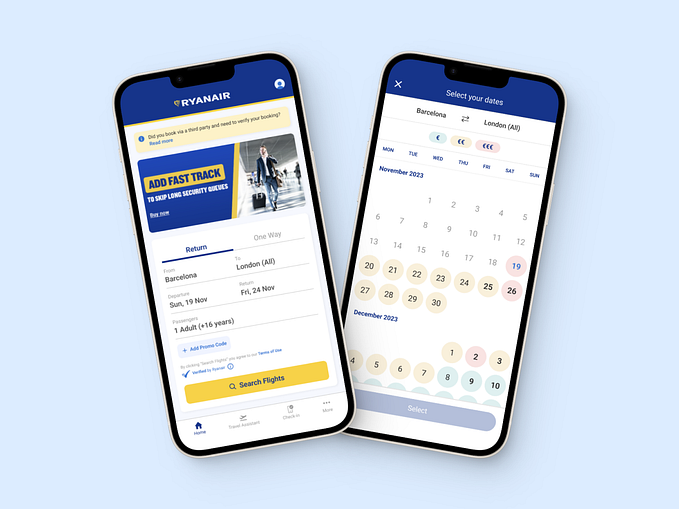

Validation testing hardware: Test on low-end devices, on Android, and on low-bandwidth connections. Remember that not all users have access to high-end devices, and consider situational challenges (low-resolution screens, noisy environments). Does the experience still work when moved into a different context?

Deliverables: Aim for diverse and inclusive representation of users in your visual imagery. Apply the same check to any photographs of your own team or the client team that you include in your deck. Watch for common scenes where it is easy to fall into traditional gender roles: photographs of one person explaining something to another, one person leading others, or two people in conversation (who is leaning in?).

Connections and collaborations: Go out of your way to meet designers and researchers who promote diversity. How about attending conferences, meetups and networking events such as Change Catalyst, AfroTech, UnidosUS, Lesbians Who Tech, or the Code2040 Summit? Do your in-house shadowing and outreach programs address equal opportunity and reach disempowered communities?

Finally: Remember that this is not just about checking items off a list. It's about knowing your users, understanding their challenges and accessibility needs, and adjusting your research and design accordingly.